When using chatbots based on Large Language Models (LLMs), users occasionally have to repeat their instructions, refine their prompts, or clarify the output they want. As a result, they may become frustrated and waste a lot of time adjusting their initial guidelines. This is why building AI agents that learn and become more efficient requires giving them real memory. In this guide, we will explore how AI agent memory allows developers to create powerful solutions and move toward fully autonomous products that remember the history of requests, retrieve data better, and understand prompts more accurately.

What is AI Agent Memory?

The term describes an AI agent’s ability to recall important data over time. This capacity enables AI bots to remember what was discussed during prior conversations and which tasks were completed. This knowledge allows them to adjust their behavior and generate more context-relevant replies. Agentic memory facilitates building an internal state that gradually evolves and improves the agent’s functioning. Here are the key concepts one should be aware of:

- State captures what is happening at the present moment.

- Persistence refers to an agent’s ability to retain knowledge across different interactions.

- Selection allows AI models to decide what facts they need to remember.

When these factors are combined, they enable agents to maintain continuity and produce useful responses.
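The three factors above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `AgentMemory` class, its `important` flag, and the JSON file used for storage are all assumptions made for the example.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict


class AgentMemory:
    """Toy memory illustrating state, persistence, and selection."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # State: the agent's current snapshot of what it knows.
        self.state: Dict[str, str] = {}
        # Persistence: reload whatever an earlier session saved, if anything.
        if path.exists():
            self.state = json.loads(path.read_text())

    def observe(self, key: str, value: str, important: bool) -> None:
        # Selection: keep only facts flagged as worth remembering.
        # (Assumption: a real agent might let an LLM or a scoring rule decide.)
        if important:
            self.state[key] = value

    def save(self) -> None:
        self.path.write_text(json.dumps(self.state))


# Session 1: the agent sees two facts but keeps only one.
store = Path(tempfile.mkdtemp()) / "memory.json"
first = AgentMemory(store)
first.observe("flight_preference", "direct", important=True)
first.observe("small_talk", "nice weather today", important=False)
first.save()

# Session 2: a fresh object recovers the retained fact from disk.
second = AgentMemory(store)
print(second.state)  # {'flight_preference': 'direct'}
```

The second session starts from an empty object yet still knows the flight preference, which is the continuity described above.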

Why is Memory Important?

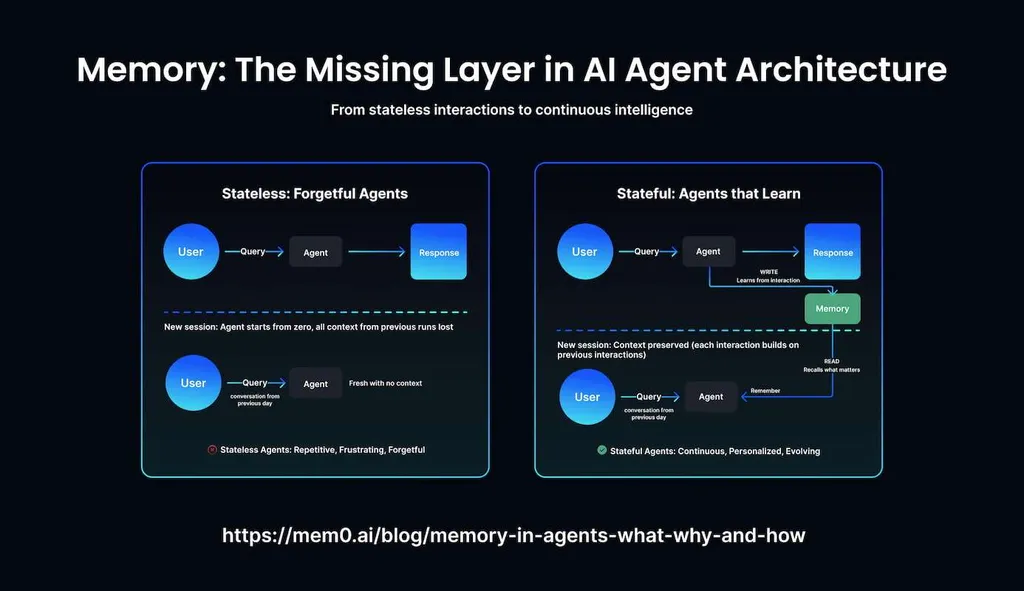

LLMs are stateless by default, which means that they do not retain anything from one request to the next. Memory enables AI agents to analyze past interactions and use what they learn to serve customers better. They gain the ability to retain the data provided by a customer and interpret conversations within a certain context. With memory, chatbots can remember the preferences of each client and provide personalized services across various communication channels.

For example, AI agents deployed by travel agencies need to know whether a customer prefers to book direct flights to save time or often looks for flights with layovers. Such virtual assistants analyze the available amenities when booking accommodations and choose hotels that meet a client’s expectations. They should also be able to recall details that were mentioned earlier.

Types of AI Agent Memory

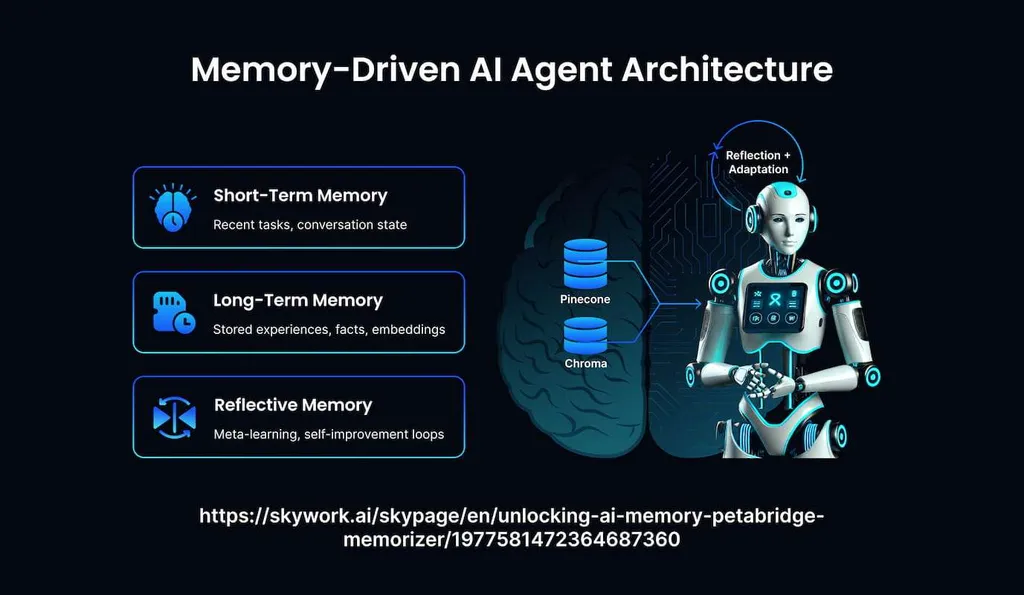

Short-term memory helps AI agents remember important details during an interaction, within the limited context window of an LLM. Agentic frameworks make such memory easier to access and ensure an AI assistant remembers the context while a thread is open. Long-term memory facilitates storing data and retrieving it whenever it becomes necessary. It permits digital assistants to analyze feedback and learn from it. It also lets them adapt their behavior and replies to a client’s preferences. Long-term memory has three subtypes:

- Episodic. It keeps records of previous interactions with an AI bot and remembers user experiences. Thanks to it, AI assistants remember customer preferences.

- Procedural. It stores insights about acquired skills, the procedures mastered by an AI agent, and how-to guidelines. It enables bots to remember the most efficient way of booking flights or performing other tasks.

- Semantic. It retains general knowledge, including facts, ideas, and information about relationships. Bots use it to access visa requirements, provide replies about the best tourist destinations, or include an estimation of hotel costs in a reply.
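One way to picture the three subtypes is as separate stores that feed a single prompt context. The sketch below is an assumption-laden illustration: `LongTermMemory`, its field layout, and the placeholder visa fact are invented for the example and do not describe any particular framework.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LongTermMemory:
    # Episodic: records of past interactions, grouped by user.
    episodic: Dict[str, List[str]] = field(default_factory=dict)
    # Procedural: named how-to procedures the agent has learned.
    procedural: Dict[str, List[str]] = field(default_factory=dict)
    # Semantic: general facts that do not belong to any single user.
    semantic: Dict[str, str] = field(default_factory=dict)

    def remember_episode(self, user_id: str, event: str) -> None:
        self.episodic.setdefault(user_id, []).append(event)

    def build_context(self, user_id: str, task: str, topic: str) -> str:
        """Collect what each memory type knows into one block of text."""
        lines: List[str] = []
        for event in self.episodic.get(user_id, []):
            lines.append(f"Past interaction: {event}")
        for number, step in enumerate(self.procedural.get(task, []), 1):
            lines.append(f"Step {number}: {step}")
        if topic in self.semantic:
            lines.append(f"Fact: {self.semantic[topic]}")
        return "\n".join(lines)


memory = LongTermMemory()
memory.remember_episode("user-42", "asked for direct flights only")
memory.procedural["book_flight"] = ["search flights", "confirm price", "issue ticket"]
# Placeholder text, not a real visa rule.
memory.semantic["visa"] = "placeholder: visa rules for the destination"
print(memory.build_context("user-42", task="book_flight", topic="visa"))
```

Keeping the stores separate makes it easier to decide, per use case, which of them an agent actually needs.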

AI products should decide which types of memory they need, what facts to retain, and when to retrieve specific details to generate an accurate reply. AI agent memory architecture is difficult to manage unless one understands how specific details will be used. A conversational AI bot should remember client preferences and use episodic memory. A digital assistant available on an e-commerce platform has to access information about products and utilize semantic memory to browse linked databases quickly and retrieve details about the items requested by a buyer.

How Does Memory Get Updated?

LLM context windows are quite limited. Besides, there is always a risk that the agent’s understanding of the context will not be accurate enough. This is why it becomes necessary to store details reliably. AI agents typically use several methods to do so:

- Summarization. The technique involves using LLM capabilities to summarize the key points of past conversations. The memory module updates the collected insights in real time, whenever new data is added. Summarized threads are saved as strings and retrieved when it becomes necessary to put a new query in context.

- Vectorization. It saves textual data as numerical representations (embeddings) that capture the meaning of the words. The approach facilitates grouping insights into semantic chunks and retrieving them with high efficiency.

- Extraction. Instead of summarizing memories or segmenting them into digestible fragments, it’s possible to extract only the most crucial details and save them in an external knowledge base while retaining some details about their context.

- Graphication. The technique involves mapping data as a graph and recording the relationships between different entities and contexts. It facilitates structuring data and creating dynamic memory storage.

When an AI agent receives new information, it can choose any supported method to retain crucial details and augment performance.
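To make two of these methods concrete, here is a rough sketch that combines extraction with graph storage. It rests on loud assumptions: the `PREFERENCE` regular expression stands in for the LLM that would normally pull facts out of a message, and `GraphMemory` is a hypothetical in-memory structure rather than a real graph database.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical pattern; a real system would likely ask an LLM to extract facts.
PREFERENCE = re.compile(r"\bI (prefer|like|dislike) ([^.,!?]+)", re.IGNORECASE)


def extract_facts(user_id: str, message: str) -> List[Tuple[str, str, str]]:
    """Extraction: keep only (subject, relation, object) facts from a message."""
    return [
        (user_id, verb.lower() + "s", obj.strip().lower())
        for verb, obj in PREFERENCE.findall(message)
    ]


class GraphMemory:
    """Graph storage: entities are nodes, relations are labeled edges."""

    def __init__(self) -> None:
        self.edges: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        # Skip duplicates so repeated statements do not bloat the graph.
        if (relation, obj) not in self.edges[subject]:
            self.edges[subject].append((relation, obj))

    def about(self, subject: str) -> List[Tuple[str, str]]:
        return list(self.edges.get(subject, []))


graph = GraphMemory()
message = "Hi! I prefer direct flights, and I dislike early departures."
for subject, relation, obj in extract_facts("user-42", message):
    graph.add(subject, relation, obj)

print(graph.about("user-42"))
# [('prefers', 'direct flights'), ('dislikes', 'early departures')]
```

Only the two preference facts are stored; the greeting and the rest of the sentence are discarded, which is the point of extraction.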

How to Use AI Agent Memory?

Algorithm-based tools retrieve relevant information saved as chunks, using an LLM to determine which pieces of data matter the most. The LLM essentially functions as a query generator: it generates function calls and relies on vector search to find the insights that will help it answer a query. Developers often add vector search features to their applications, as such capabilities allow apps to handle complex queries with ease.
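The retrieval step can be illustrated with a toy vector search. The sketch below assumes a deliberately crude embedding (plain word counts) so that it runs with the standard library alone; real systems use a learned embedding model and a vector database, and the function names here are made up for the example.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, chunks: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return the top_k stored chunks most similar to the query."""
    query_vector = embed(query)
    scored = [(cosine(query_vector, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


chunks = [
    "User prefers direct flights to save time",
    "User booked a hotel with a pool in May",
    "User asked about visa requirements",
]
best_score, best_chunk = search("Which flights does the user prefer?", chunks)[0]
print(best_chunk)  # User prefers direct flights to save time
```

Word counts only match exact tokens ("prefer" does not match "prefers"), which is one reason production systems rely on semantic embeddings instead.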

When data becomes outdated, it’s crucial to let it decay to avoid filling the storage with unnecessary data and to keep the whole system efficient. A built-in forgetting mechanism helps AI memory drop details it no longer needs. These might be a user’s preferences that have since changed, or facts that are no longer relevant. If memory is bloated with useless details, it may slow down retrieval, make replies less accurate, and waste resources. By implementing eviction and expiration policies, developers prevent memory bloat. They also add timestamps to simplify search and make it easier to sort the results.
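A minimal sketch of such a forgetting mechanism is shown below. It assumes two simple policies, a time-to-live for expiration and least-recently-used eviction once a size cap is reached; the `DecayingMemory` class and its parameters are illustrative, not a reference to any specific product.

```python
import time
from collections import OrderedDict
from typing import Callable, Optional


class DecayingMemory:
    """Toy store with timestamps, expiration (TTL), and LRU eviction."""

    def __init__(self, ttl_seconds: float, max_items: int,
                 clock: Callable[[], float] = time.time) -> None:
        self.ttl = ttl_seconds
        self.max_items = max_items
        self.clock = clock  # injectable so the demo does not need to sleep
        # key -> (value, timestamp of last write); order tracks recency of use
        self.items = OrderedDict()

    def put(self, key: str, value: str) -> None:
        self.items[key] = (value, self.clock())
        self.items.move_to_end(key)
        # Eviction: once over capacity, drop the least recently used entry.
        while len(self.items) > self.max_items:
            self.items.popitem(last=False)

    def get(self, key: str) -> Optional[str]:
        entry = self.items.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        # Expiration: entries older than the TTL are forgotten on access.
        if self.clock() - stored_at > self.ttl:
            del self.items[key]
            return None
        self.items.move_to_end(key)
        return value


now = [0.0]  # fake clock for the demo
memory = DecayingMemory(ttl_seconds=10, max_items=2, clock=lambda: now[0])
memory.put("seat", "aisle")
memory.put("meal", "vegetarian")
memory.get("seat")              # marks "seat" as recently used
memory.put("hotel", "pool")     # over capacity: "meal" is evicted
print(memory.get("meal"))       # None
now[0] = 11.0                   # 11 seconds later the rest has expired
print(memory.get("seat"))       # None
```

The stored timestamps are also what makes it possible to sort or filter memories by age during retrieval.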

The Difference Between Memory and RAG

Both retrieval-augmented generation (RAG) and memory systems are designed to retrieve data on behalf of LLMs. However, they were created for different purposes. RAG allows AI bots to obtain external knowledge and use it when answering prompts. It is especially useful when a chatbot needs to find facts in documents.

RAG is stateless, as it has no awareness of past conversations, user identity, or how different queries are related. In contrast, memory is all about continuity. It allows digital assistants to remember client preferences, previous requests, solutions, and issues. RAG helps AI assistants provide better answers, while memory enables them to act more efficiently.
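The contrast can be shown with two small, hypothetical pieces of code. In this sketch, `rag_lookup` stands in for a RAG retriever (here a naive keyword match over placeholder documents) and `UserMemory` stands in for a memory system; both names and the sample texts are assumptions made for illustration.

```python
from typing import Dict, List


def rag_lookup(query: str, documents: List[str]) -> List[str]:
    """Stateless: the result depends only on the query and the documents."""
    words = set(query.lower().split())
    return [doc for doc in documents if words & set(doc.lower().split())]


class UserMemory:
    """Stateful: the result depends on who asks and what they said before."""

    def __init__(self) -> None:
        self.notes: Dict[str, List[str]] = {}

    def record(self, user_id: str, note: str) -> None:
        self.notes.setdefault(user_id, []).append(note)

    def recall(self, user_id: str) -> List[str]:
        return list(self.notes.get(user_id, []))


# Placeholder documents, not real policies.
documents = [
    "Baggage allowance is described in section 2",
    "Refund policy is described in section 5",
]

# The same query returns the same documents for every user, every time.
print(rag_lookup("baggage allowance", documents))

# Memory differs per user and grows with each interaction.
memory = UserMemory()
memory.record("alice", "prefers aisle seats")
print(memory.recall("alice"))  # ['prefers aisle seats']
print(memory.recall("bob"))    # []
```

In practice the two are often combined: retrieved documents supply the facts, while memory supplies the user-specific context for the answer.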

Final Thoughts

AI agent memory allows developers to build a resilient architecture with knowledge filtering, dynamic forgetting, and fast recall. It facilitates creating AI assistants designed to maintain continuity across several conversations. Equipped with memory, bots remember how they solved specific issues in the past and provide personalized support. Digital personal assistants adjust their behavior based on newly acquired knowledge of clients’ habits. However, building them from scratch might require a significant investment. This is why many ventures entrust reputable providers with this task. MetaDialog creates custom LLMs for enterprises of all sizes. These models can power AI agents capable of remembering prior interactions. Get in touch with our team and learn how to deploy advanced AI solutions to optimize your workflows.