With the development of advanced Artificial Intelligence (AI) tools, companies started to look for efficient ways to ensure their uninterrupted performance. Algorithmic models, while powerful, often contain hidden vulnerabilities that can expose them to cyber threats and manipulation. When intelligent systems are utilized in real-world environments, they must operate efficiently and comply fully with established requirements. In this blog post, we’ll discover how AI red teaming allows experts to simulate potential attacks on apps and secure their stable functioning.

What is AI Red Teaming?

The term means the process of testing predictive models to be sure they are shielded against malicious intervention and possess advanced capabilities to prevent data leaks, eliminate inaccuracies, and avoid producing unsafe outputs. Entities need to utilize trusted AI solutions to prevent information leakage, eliminate discriminatory content, and enhance app performance. Building customer loyalty necessitates proactively fixing errors impacting the integrity of generated data and files. Enterprises should thoroughly test their AI apps to fine-tune and augment outputs.

Red teaming (RT) was originally deployed by the US military when running simulations to assess the performance of blue teams against their “red” adversaries. Since then, the approach has been used to contemplate what steps an enemy might take in a certain situation. IT professionals have adopted a similar practice to assess the safety of networks, analyze software flaws, and detect vulnerabilities that could be used by hackers to gain access to sensitive information.

Specialists utilize red team tools to test LLMs for a range of issues exploited by malicious individuals. Such features are also designed to unveil hallucinations, flag inappropriate outputs, and detect copyright infringement.

Deploying RT for GenAI tools involves checking whether they can be provoked into generating replies they were taught to avoid. The strategy facilitates exposing biases overlooked by creators. When a problem gets discovered, the developers feed the model with new instructions to adjust its performance. It allows them to enhance their safety and introduce improved security guardrails.

How Does AI Red Teaming Work?

When the first solutions based on GenAI were released, users started to discover new ways of bypassing their safety boundaries just for fun. They experimented with different prompts to circumvent the available safety filters or discover other ways of exploiting vulnerabilities. Adversarial testing enables developers to upgrade AI tools by deploying experts trained to unveil weaknesses and dangerous limitations.

The approach proved to be quite effective when it comes to uncovering weaknesses. However, organizations should also deploy dedicated tools to streamline training and boost the profile of LLMs. The method facilitates the improvement of AI platforms, making it an invaluable part of every efficient cybersecurity strategy.

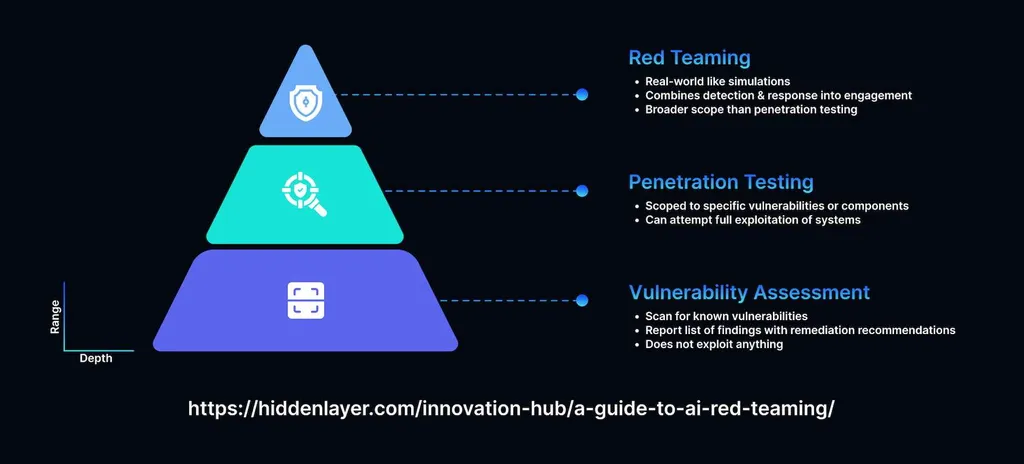

Simulating adversarial attacks enables teams to stress-test the performance of AI apps under operational conditions. Threat modeling has demonstrated better results than the classic penetration testing approach often used by developers. It enables testers to analyze algorithmic solutions from the perspective of adversaries and identify issues that hinder a model’s performance.

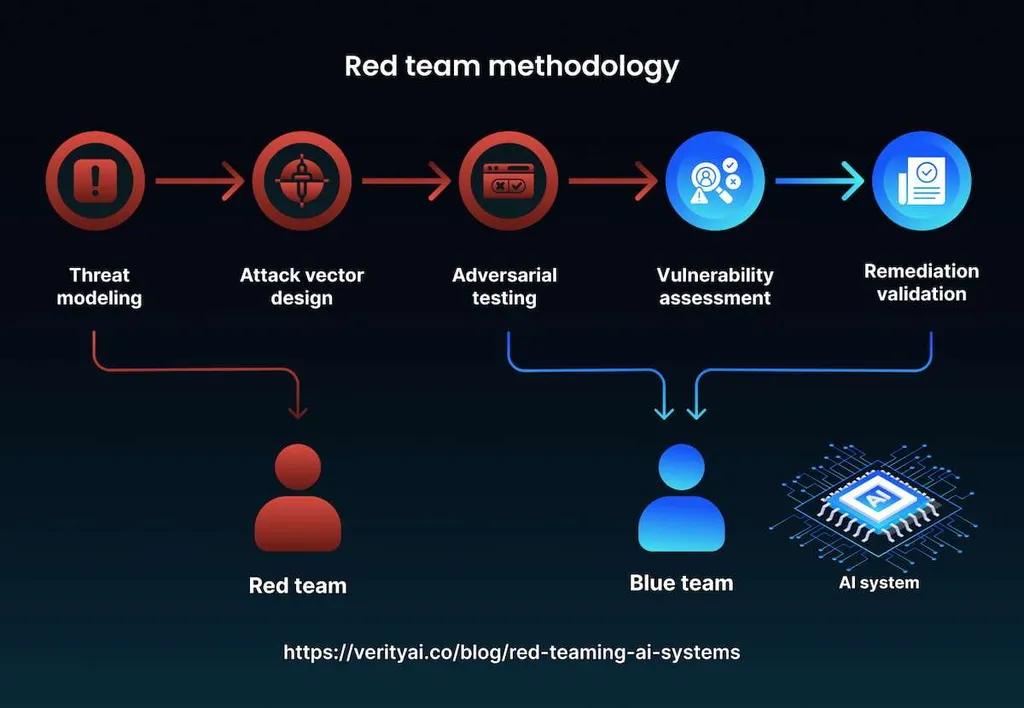

Here are the main stages of AI red teaming:

- Simulating adversarial attacks;

- Discovering points of failure;

- Performing a comprehensive threat assessment.

Unlike AppSec testing, this innovative approach fully recreates the dynamics of everyday situations. When AI becomes an integral part of complex environments in heavily regulated industries, it is vital to perform adversarial AI assessment to uphold the integrity of sensitive details. The practice is often used in healthcare, high-tech, and other sectors. It facilitates discovering exploitabilities compromising critical infrastructure and dealing with challenges distorting AI-generated outputs.

Primary Use Cases of AI Red Teaming

It might be impossible to enhance the performance of AI without thoroughly testing it in deployment scenarios. AI technology becomes advanced with every passing year, but many enterprises fail to leverage the full potential of such solutions. Security risks, limited transparency, and low accountability are the key factors hindering the adoption of AI. However, AI RT may help organizations to elevate their profiles and enhance responses generated by AI tools. Below, we have briefly outlined the main areas where this approach is deployed to improve testing efficiency.

Risk Detection

Basic and complex AI systems have weaknesses that often remain hidden during the early stages of their deployment. However, they inevitably occur when workflows and tasks become more complicated. When an organization builds a custom LLM, it should deploy advanced methods to discover deficiencies. Using a proactive approach, it can unveil hidden problems and discover how to mitigate risks before malicious actors try to exploit them.

Resilience Improvement

When hackers target specific AI systems, they can significantly disrupt their performance, resulting in substantial financial losses. AI reliability evaluation improves the capability of AI products to fortify themselves against different types of cyberattacks, including data poisoning and unauthorized exploitation of a system’s resources. By simulating threats, companies upgrade the protection of models and make them more capable of operating in changing environments and dealing with hostile users.

Regulatory Compliance

Many industries follow strict security standards and ethical guidelines. As these rules evolve constantly, keeping track of them requires building algorithmic systems capable of tracking these changes and adjusting their performance accordingly. Attack scenario simulation facilitates discovering whether AI products adhere to regulations. It permits companies to foster trust and achieve a solid reputation.

Bias and Data Privacy Testing

Discovering biases is daunting without deploying efficient testing strategies. Testers must use the newest strategies to unveil biased information within the data sets used during the training and eliminate the possibility of unfair outcomes to make their solutions more inclusive. As AI tools are often used to process sensitive data, testers should check how these apps handle confidential information and what data storage methods they use.

Performance Under Challenging Conditions

When demand for AI services increases drastically, the performance of AI tools may significantly deteriorate. Some systems fail to handle high volumes of data or process contradictory inputs. Testing enables businesses to ensure their AI applications will continue to function during crises. Besides, it prevents users from leveraging misleading inputs to produce harmful content. AI misuse evaluation is a customizable testing model. It works best in situations when an organization needs to come up with a more nuanced testing strategy. In finance, testing teams may focus on fraud or fund misuse cases. In healthcare, the goal is to identify issues resulting in life-critical mistakes.

Integration Issues

Implementing AI requires considering how it will function in conjunction with legacy systems. Testing allows one to examine existing connection points and secure them against unauthorized access. Testers focus on integration with APIs, external data storage, and external apps to discover any problems endangering the whole framework. Adversarial simulation may involve AML defense testing. Its goal is to understand whether malicious users can manipulate an algorithm.

Harm scenario evaluation has demonstrated impressive results in different situations. The method facilitates discovering emerging issues and unveiling industry-specific threats. By learning how to proactively detect and resolve issues, companies can build more resilient and functional AI tools.

Benefits of AI Red Teaming

Due to the variety of applications, AI red teaming has many undisputed advantages. Ventures with all budgets can use the practice to avoid unnecessary expenses associated with data leaks. Below, we have described the main reasons to deploy it:

- Proactive risk discovery and defensive measures

- Upgrading harm minimization mechanisms

- Better operational efficiency and consistent performance

- Diminished policy violation hazards

- Elimination of discriminatory outputs

- Efficient stress-testing

- Assessment of threats related to interactions with users

- Increased accountability

Due to these factors, the incorporation of AI RT methods is becoming widespread. The methodology is expected to dominate the market and lead to the faster deployment of AI in various apps. It will become possible to build frameworks designed to facilitate making informed decisions based on verified facts and handle information without compromising important details.

Like other universally deployed artificial intelligence security methods, RT will change with the emergence of more sophisticated threats. Modern platforms are better equipped to counter attacks, but they still might be liable to them. Innovative ethical hacking tools will be used by entities of various sizes to configure the functioning of LLMs.

The emergence of GANs results in large-scale AI app manipulation. RT enables modern ventures to counter the threat. Companies from defense and other industries focused on the use of private or classified data will utilize new, optimized methods to perform impartial evaluations of their frameworks and security mechanisms. Their main focus is on increasing accountability and building trust. This is where hiring third-party cybersecurity experts becomes crucial. However, due to the shortage of talent, many enterprises prefer to outsource testing tasks to reputable providers. Such teams perform continuous testing and provide evidence of current and anticipated AI hazards.

How to Perform AI Red Teaming

Embracing this approach lets testers follow a well-structured procedure. It comprises several important steps and involves using efficient techniques designed to assess the resilience of AI apps. Be sure to select a suitable method, depending on your needs. You can use various approaches:

- Manual testing relies on the input of experts who create prompts and interact with AI to recreate hazardous conditions. Then, analysts assess the outcomes using certain criteria, including the severity of the problem and the efficacy of the adopted methodology. This approach enables teams to detect the most complex issues.

- Automated testing involves deploying AI algorithms and producing harmful inputs to simulate attacks at a larger scale. Developers use classifiers to assess outcomes against certain benchmarks. The shortcoming of the method lies in its insufficient efficiency when one needs to discover more innovative threats.

- The combined strategy involves using automated tools and incorporating a human element into the process. It facilitates the development of a more result-oriented testing framework. Teams create a set of prompts describing the conditions of potential attacks and then use AI to scale these issues into extensive datasets.

Each methodology has its unique strengths. Manual testing is the obvious choice in cases where one needs to identify and eliminate nuanced issues. Automated and hybrid approaches facilitate discovering problems at a scale.

Major companies quickly saw the gains derived from AI testing. OpenAI has developed a whole network designed to identify cases where their model can be used to generate harmful outputs. Instead of relying on content warnings, its developers focused on monitoring biases to augment a model’s capacity to detect harmful content without compromising the UX.

Microsoft began using a VLM to ensure that its tools do not produce illicit content. Its specialists were quick to realize that image inputs were more challenging to analyze, making them more susceptible to boundaries. Microsoft simulates system attacks to discover how some users may misuse AI tools.

Final Thoughts

AI red teaming enables ventures to significantly enhance their apps. Building an LLM from scratch and introducing proper protection mechanisms requires a lot of resources. This is why businesses increasingly rely on trustworthy companies with specialists equipped with relevant skillsets. It allows them to save valuable time and avoid launching costly training programs.

MetaDialog helps its clients build custom LLMs, integrate them with existing systems, and mitigate potential threats that could impact their performance. Our experts have extensive hands-on experience with advanced AI models and utilize powerful testing tools to ensure their uninterrupted functioning. Get in touch with our team and discover how to deploy AI systems safely and strengthen their performance.