Large Language Models (LLMs) power sophisticated systems that handle repetitive tasks efficiently while generating contextually relevant responses to user queries. They are designed to analyze complex text input and make independent decisions. An LLM agent can operate autonomously, which reduces the need for human intervention, and such assistants can complete many routine tasks in seconds. In this guide, we will explore how companies deploy virtual helpers to optimize processes, streamline customer support (CS) routines, and save money.

What are LLM Agents?

LLM agents are AI systems built to generate nuanced text replies. They can reason through problems, predict possible outcomes, remember client conversations, and adjust their tone of voice to each case. Many agents, even simple ones, rely on retrieval-augmented generation (RAG), which lets them look up the necessary data in a connected knowledge base. More complex AI agents understand rules, consider the context, and analyze questions in depth to formulate an informed strategy.

These agents can split complex tasks into smaller subtasks. To solve an issue, they first check the relevant information in their databases, then consider how similar problems were addressed in the past. They may also analyze the available data to estimate future trends.

AI assistants process human language fluently. They can draw on large volumes of information and perform a variety of actions autonomously. RAG and careful prompt engineering improve their performance and response accuracy. Even though LLM agents are powerful, they are prone to occasional hallucinations, that is, confident but incorrect answers. Companies also have to safeguard client privacy when deploying such solutions.

How do LLM Agents Function?

AI assistants use an LLM to access the necessary data, draw on memory, and complete tasks by applying reasoning and specialized tools. Let’s consider their key capabilities:

- Retrieval. Bots use RAG to fetch up-to-date data, such as current regulations or inventory status, and base their replies on it.

- Reasoning. LLM developers deploy advanced methods to ensure that their products understand complex prompts and choose the most appropriate course of action.

- Memory. LLM agents remember the history of recent chats and have access to storage containing structured data. They use this short- and long-term memory to adapt to each conversation’s scenario.

- Tool utilization. Bots connect to third-party systems to extract insights or carry out tasks. They can run and debug code, analyze a buyer’s purchases, and perform other actions within their scope.

These capabilities enable LLM bots to simplify complex workflows and handle assigned issues autonomously. An AI B2B sales assistant retrieves data from a CRM, analyzes past operations and interactions, schedules appointments, and reports on progress. Similarly, IT agents read system logs to identify the cause of an error, choose a troubleshooting strategy, and either run scripts to fix the issue or create a ticket for the technical support team.
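To make the IT example concrete, here is a minimal sketch of an agent loop that combines tool use, a reasoning step, and short-term memory. It is an illustration under stated assumptions, not a production design: the tools `read_logs` and `create_ticket` and the `Agent` class are hypothetical, the logs are hard-coded, and the `decide` method is a simple rule standing in for the call a real agent would make to an LLM.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools: stand-ins for a real log service and ticketing API.
def read_logs(service: str) -> str:
    fake_logs = {"billing": "ERROR disk full on /var/data", "search": "INFO all checks passed"}
    return fake_logs.get(service, "")


def create_ticket(summary: str) -> str:
    return f"TICKET-1: {summary}"


TOOLS: dict[str, Callable[[str], str]] = {"read_logs": read_logs, "create_ticket": create_ticket}


@dataclass
class Agent:
    memory: list[str] = field(default_factory=list)  # short-term memory for this session

    def decide(self, observation: str) -> tuple[str, str] | None:
        # Placeholder for the LLM's reasoning step: a real agent would send the task,
        # its memory, and the tool descriptions to a model and parse the chosen action.
        if observation.startswith("ERROR"):
            return ("create_ticket", observation.removeprefix("ERROR").strip())
        return None

    def run(self, service: str) -> str:
        observation = TOOLS["read_logs"](service)  # tool use: fetch live data
        self.memory.append(f"logs[{service}]: {observation}")
        action = self.decide(observation)  # reasoning: pick the next step
        if action is None:
            result = "no action needed"
        else:
            tool_name, argument = action
            result = TOOLS[tool_name](argument)  # tool use: act on the decision
        self.memory.append(f"result: {result}")  # memory: record the outcome
        return result
```

Calling `Agent().run("billing")` returns `"TICKET-1: disk full on /var/data"`, while a healthy service such as `"search"` yields `"no action needed"`. Keeping tools in a registry dictionary means new integrations can be added without changing the loop itself.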

Main Features of an LLM Agent

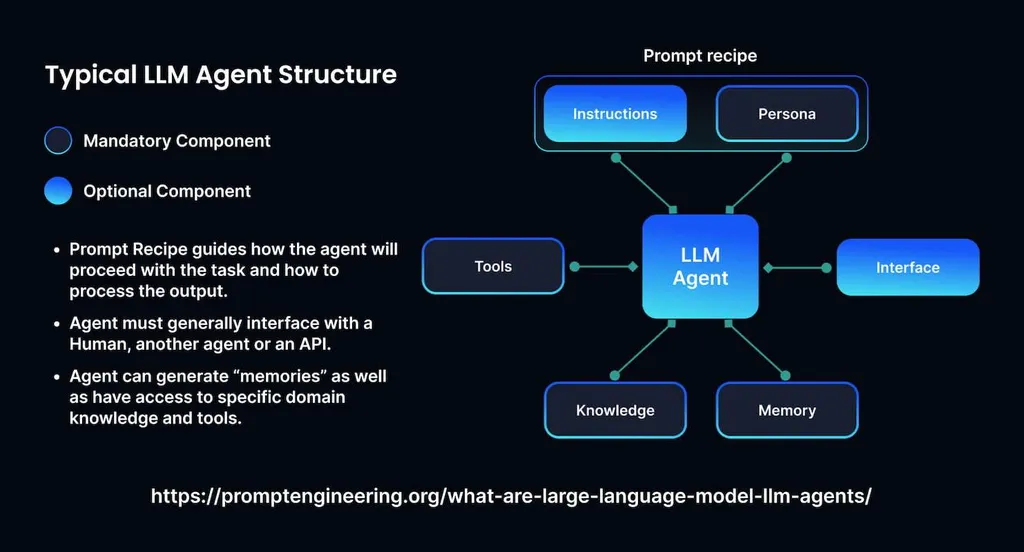

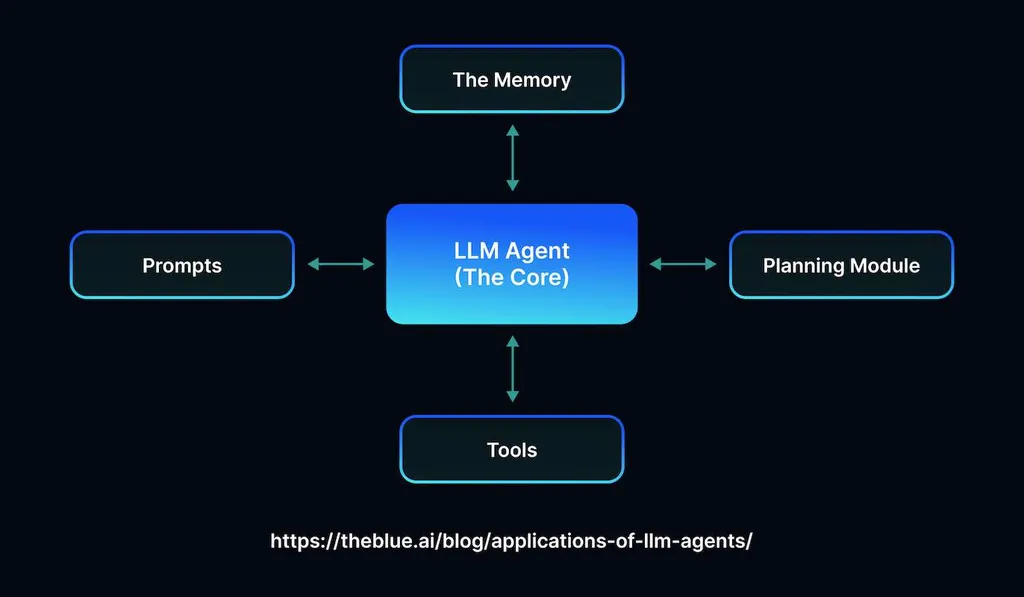

As AI technology advances, AI bots gain new capabilities. Modern LLM agents typically combine four components:

- Language model. This is the core of every agent: generally, the more capable the model, the better the assistant performs. Models are trained on vast amounts of text, which allows them to interpret messages in context, detect recurring patterns, and generate comprehensive, in-depth responses on a wide range of topics.

- Memory. AI helpers remember past conversations, client preferences, and topics. It allows them to maintain coherent dialogues and engage in natural communication.

- Tool use. Bots work seamlessly with external apps, APIs, and databases. They call APIs, fetch live data, schedule appointments, and interact with various systems.

- Planning. LLM agents handle complicated tasks by dividing them into a series of simple steps. They can either draw up a plan once and execute it without feedback, or spend more time revising the plan after analyzing the results of each action.
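The difference between planning with and without feedback can be illustrated with a small plan-and-execute loop. This is a sketch under assumptions: `make_plan` simply splits the task on the word "then" where a real agent would ask the LLM to decompose it, and `execute` is a stub that fails on the first attempt at booking a meeting. Setting `max_retries=0` corresponds to executing the plan without feedback.

```python
from __future__ import annotations


def make_plan(task: str) -> list[str]:
    # Hypothetical planner: a real agent would ask the LLM to decompose the task.
    return [step.strip() for step in task.split(" then ")]


def execute(step: str, attempt: int) -> bool:
    # Stubbed executor: "book meeting" fails on the first try only.
    return not (step == "book meeting" and attempt == 0)


def run_with_feedback(task: str, max_retries: int = 2) -> list[str]:
    log: list[str] = []
    for step in make_plan(task):
        for attempt in range(max_retries + 1):
            if execute(step, attempt):
                log.append(f"done: {step}")
                break
            # Feedback: note the failure and try the step again instead of moving on.
            log.append(f"failed: {step}")
        else:
            # Runs only if every attempt failed (the loop never hit `break`).
            log.append(f"gave up: {step}")
    return log
```

With the default settings, `run_with_feedback("fetch lead then book meeting then send summary")` recovers from the failed booking on the second attempt; with `max_retries=0` the same plan records the failure and moves on.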

The most advanced systems combine these components to work iteratively toward an answer, which lets them solve challenging requests.

Types of LLM Agents

Companies seek to improve their performance and optimize operations by deploying various AI tools. Below, we have briefly outlined the main varieties of AI helpers available today.

- Conversational bots. These solutions are often used in customer support, where they help businesses serve clients faster, reduce response times, and increase satisfaction. They are also suitable for lead generation. Such agents maintain natural-sounding conversations, provide information without delays, answer queries in depth, offer relevant recommendations, and help users solve various problems. They are increasingly used in eCommerce, healthcare, and other industries where strong client relations are crucial to an organization’s success.

- Task-oriented bots. These assistants communicate with users to clarify their needs, recognize possible signs of dissatisfaction, and help them solve the issues they face, which helps companies streamline routine work. They are often used in HR and other areas where employees or customers need help with specific tasks.

- Content-generation solutions. Bots with creative capabilities produce images, audio, and text in different styles. They adapt to a user’s preferences and can generate engaging content that appeals to the target audience.

- Enterprise-level AI tools. These bots streamline communications, help people coordinate processes, and simplify teamwork. Collaborative agents manage projects, produce reports, and facilitate decision-making.

Each solution has specific applications. When choosing between them, a company should define its goals and identify the areas that need improvement.

Use Cases and Applications

Companies use LLM applications wherever they need to process large datasets quickly and respond in natural language. These apps are well suited to answering queries, providing assistance and recommendations, optimizing procedures, and interpreting text. They support marketing campaigns, analyze data, help ensure compliance, provide legal and healthcare assistance, monitor financial transactions, and handle other tasks.

There are several areas where LLM-driven bots have demonstrated high efficiency:

- Customer support. Many companies use LLM assistants to offer high-quality support and improve the client experience. Reputable providers like MetaDialog develop enterprise-level custom LLMs capable of automating 81% of responses and making CS teams five times more productive, with bots that reduce the average resolution time to 20 seconds. LLM bots answer common questions in real time, troubleshoot problems, and provide 24/7 assistance.

- Sales. AI bots excel at lead generation. They identify the most promising leads and keep conversations engaging. This lets them collect important information, send relevant follow-up messages, provide personalized recommendations, and offer comprehensive information about products.

- HR and IT processes. AI assistants also answer questions asked by team members. They help businesses optimize internal operations and save time spent on routine tasks that include communication between teams. In HR, AI agents answer questions about benefits, a company’s policies, payroll, and other issues that might interest employees. In IT, they make it easier to troubleshoot technical problems and automate simple processes like setting up an account. It enables IT professionals to focus on high-priority issues instead of being distracted by routine tasks.

As the technology improves, new use cases are expected to appear. While LLMs can be expensive to build, train, and integrate with existing systems, they can save a company significant resources in the long term.

Augmenting an LLM’s Performance

Many enterprises prefer custom LLMs trained on extensive, high-quality data. Such models provide personalized responses and adapt to each user’s needs, which helps businesses avoid generic replies and strengthen their relationships with clients.

Here are the main ways to improve the quality of responses generated by AI bots:

- RAG. When a user asks a chatbot for specific information about a product listed on an e-commerce platform, for a shipping status update, or for an item that meets particular criteria, the assistant retrieves the relevant records from its knowledge base and uses them to ground its reply. Because the knowledge lives in the database rather than in the model itself, keeping answers current means updating the data, not retraining the model, so this approach is relatively simple to maintain.

- Fine-tuning. This approach continues training an LLM on task-specific examples that help it solve challenging tasks. For instance, companies may use transcripts of their most successful sales calls to show a bot how to choose the best conversation strategy. Experienced engineers can fine-tune custom LLMs through APIs. While fine-tuning is costly, the resulting models are easier to maintain. However, it is poorly suited to situations where an LLM’s behavior must be adjusted in real time.

- N-shot learning. Teams short on time can improve replies by including examples directly in the prompt sent with an LLM API call. Even one detailed example (one-shot) can noticeably improve a model’s responses; when several examples are given, the approach is called n-shot. It does not change the model itself, so the examples must be added to every prompt, which makes the method cumbersome. Fine-tuning takes more time up front, but it removes the need to repeat examples in each request.

- Prompt engineering. Many effective techniques exist, such as chain-of-thought prompting, which asks an LLM to spell out its reasoning step by step before giving an answer.
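The retrieval step behind RAG can be sketched in a few lines. In this illustration, the knowledge base is a hypothetical list of three sentences, and documents are ranked by cosine similarity over word counts; real systems usually use learned embeddings and a vector store instead, so treat the scoring function as a simplified stand-in. The retrieved text is then placed into the prompt so the model answers from current data.

```python
from __future__ import annotations

import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this would be a product or order database.
KNOWLEDGE_BASE = [
    "Order 1042 shipped on Monday and is in transit.",
    "The trail shoes are waterproof and come in sizes 36 to 47.",
    "Returns are accepted within 30 days of delivery.",
]


def vectorize(text: str) -> Counter[str]:
    # Bag-of-words vector: a simplified stand-in for an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter[str], b: Counter[str]) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    # Rank every document by similarity to the query and keep the top k.
    q = vectorize(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, vectorize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    # Ground the model's answer in the retrieved context.
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

For example, `retrieve("Where is order 1042?")` returns the shipping sentence, and `build_prompt` wraps it with the question before it is sent to the model.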

Each strategy helps engineers get more accurate responses that contain relevant information. However, several of them, notably RAG, n-shot examples, and chain-of-thought prompting, increase token usage, so responses to complex queries take longer and can become too expensive.
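The token trade-off of n-shot and chain-of-thought prompting is easy to see when the prompt is assembled in code. The sketch below uses hypothetical labelled examples for a support-ticket classifier and counts whitespace-separated words as a rough proxy for tokens; real tokenizers count differently, so only the trend, not the exact numbers, is meaningful.

```python
from __future__ import annotations

# Hypothetical labelled examples for classifying customer messages.
EXAMPLES = [
    ("My parcel never arrived.", "shipping"),
    ("I was charged twice.", "billing"),
    ("How do I reset my password?", "account"),
]


def build_n_shot_prompt(query: str, n: int, chain_of_thought: bool = False) -> str:
    lines = ["Classify each customer message as shipping, billing, or account."]
    # Include the first n examples in the prompt (n = 0 is zero-shot).
    for message, label in EXAMPLES[:n]:
        lines.append(f"Message: {message}\nCategory: {label}")
    # Chain-of-thought: ask the model to reason before committing to an answer.
    instruction = "Think step by step, then give the category." if chain_of_thought else "Category:"
    lines.append(f"Message: {query}\n{instruction}")
    return "\n\n".join(lines)


def rough_token_count(prompt: str) -> int:
    # Whitespace split is only a crude proxy; real tokenizers count differently.
    return len(prompt.split())


if __name__ == "__main__":
    query = "Where is my refund?"
    for n in (0, 1, 3):
        print(n, rough_token_count(build_n_shot_prompt(query, n)))
```

Because the examples travel with every request, each added shot raises the cost of every call, which is the overhead that fine-tuning avoids.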

How to Build an LLM Agent

Before creating a custom LLM agent, you should consider its goals, budget, and application area. Follow these steps to build a solution for your needs.

- Set objectives. Consider the result you want to achieve and build an AI agent capable of solving the specific set of tasks that leads to it. Defining these tasks up front clarifies what the agent’s area of expertise should be and how to configure it for efficiency.

- Choose a suitable provider. Find a platform that specializes in AI products and can customize them to match your requirements. Services like MetaDialog integrate their solutions with existing software and build autonomous AI agents that are easy to use. The platform you choose should have expertise in the kind of LLM you want to build and help its clients with integration and maintenance.

- Adjust the LLM. You can either use a pre-built model or fine-tune it to perform specialized tasks more reliably.

- Integrate the LLM with CRMs, APIs, and databases. This enables your digital agent to access data in real time.

- Test the bot using the tools available on the AI platform. Be sure to configure parameters, experiment with different prompts, and implement various strategies to see which of them allows you to reach your goals faster. This will help the AI bot solve tasks more efficiently in real-life situations.

- Deploy the bot and track its performance. Use professional monitoring tools to analyze an LLM agent’s behavior and success rates.
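Testing and monitoring both come down to measuring how often the bot gets things right. As a minimal sketch, the harness below runs a set of test prompts through a bot and reports the share of answers that contain an expected keyword. The test cases and `stub_bot` are hypothetical, and keyword matching is a deliberately crude check; teams often add human review or model-based grading on top of it.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical test prompts, each paired with a keyword the answer must contain.
TEST_CASES = [
    ("What is your return window?", "30 days"),
    ("Do you ship abroad?", "international"),
    ("Can I pay by invoice?", "invoice"),
]


def stub_bot(prompt: str) -> str:
    # Stand-in for a call to the deployed LLM agent.
    canned = {
        "What is your return window?": "Returns are accepted within 30 days.",
        "Do you ship abroad?": "Yes, we offer international shipping.",
    }
    return canned.get(prompt, "Sorry, I don't know.")


def success_rate(bot: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    # Count an answer as a pass if it contains the expected keyword (case-insensitive).
    passed = sum(1 for prompt, expected in cases if expected.lower() in bot(prompt).lower())
    return passed / len(cases)
```

Here `success_rate(stub_bot, TEST_CASES)` evaluates to two out of three, flagging the invoice question as a gap. Running the same harness after each prompt or configuration change makes it easier to compare strategies.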

Following these steps helps you collect the insights you need to keep improving your bot. Companies that put off integrating AI bots with their platforms may lose their competitive advantage and relevance in the market. Digital assistants are especially valuable for e-commerce businesses, as they allow them to process growing order volumes without delays.

Challenges Associated with LLM Agents

Even though chatbots have demonstrated impressive efficiency, one may face several issues when trying to use them. Here are the main obstacles preventing their widespread adoption:

- Limited understanding of the context. LLM agents can hold only a limited amount of text in their context window at a time. They may disregard an essential part of their instructions or forget details a customer mentioned earlier. Even though vector stores and other methods give AI models access to more data, this issue is difficult to solve entirely.

- Problems with long-term planning. LLM agents may struggle to create plans that span longer periods, and they find it especially hard to adjust when something unexpected happens. In such situations, they are often less effective than humans.

- Inconsistent output quality. Chatbots use natural language to communicate with digital products and databases. However, the outputs they generate are not always accurate. Users complain about formatting issues or mention that AI bots occasionally disregard instructions. Due to this, users have to spend more time clarifying the prompts.

- Adapting to specific roles. LLM bots often struggle with uncommon tasks and may not reflect the differing values and expectations of the people they serve.

- Prompt issues. Users have to write clear and detailed prompts to avoid errors. Even slight inaccuracies can result in significant mistakes.

- Cost efficiency. Running powerful AI bots requires significant computing resources, since they must process large amounts of data within a short timeframe. Prompts that lack information add further cost and delay, because they lead to errors and extra clarification rounds.
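The first challenge above, limited context, can be illustrated with a sliding-window memory. This is a hypothetical sketch, not how any particular framework manages context: the `ConversationMemory` class keeps the instructions pinned so they are never dropped, then evicts the oldest conversation turns once a word budget (a crude stand-in for a token limit) is exceeded.

```python
from __future__ import annotations

from collections import deque


class ConversationMemory:
    """Keeps pinned instructions plus as many recent turns as fit a word budget."""

    def __init__(self, instructions: str, budget: int) -> None:
        self.instructions = instructions
        self.budget = budget
        self.turns: deque[str] = deque()

    @staticmethod
    def _size(text: str) -> int:
        return len(text.split())  # crude stand-in for a tokenizer

    def add(self, turn: str) -> None:
        self.turns.append(turn)
        # Evict the oldest turns until instructions plus turns fit in the budget.
        while self.turns and self._size(self.instructions) + sum(map(self._size, self.turns)) > self.budget:
            self.turns.popleft()

    def context(self) -> list[str]:
        # What would actually be sent to the model on the next call.
        return [self.instructions, *self.turns]
```

With a 10-word budget, adding "My order number is 1042." and then "It has not arrived yet." pushes the order number out of the context, which is exactly the kind of forgotten detail described above. Pinning the instructions addresses one failure mode, but older facts still need a separate long-term store, such as a vector database, to survive.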

Solving these issues helps firms make their LLM agents more reliable and expand their potential applications. Many companies use multi-agent frameworks, in which several AI bots work together on complex tasks, share information, and split convoluted processes among themselves.
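One common way for agents to share information is a shared "blackboard" that each agent reads from and writes to. The sketch below assumes two hypothetical agents, a research agent that looks up account history (hard-coded here in place of a CRM query) and a writer agent that drafts a message from those findings, run in sequence by a simple coordinator. Real frameworks add message passing, concurrency, and an LLM behind each agent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Blackboard:
    """Shared store the agents use to exchange intermediate results."""

    notes: dict[str, str] = field(default_factory=dict)


def research_agent(board: Blackboard, customer: str) -> None:
    # Hypothetical stand-in for an agent that queries a CRM.
    history = {"acme": "2 open tickets, renewal due in June"}
    board.notes["history"] = history.get(customer, "no records")


def writer_agent(board: Blackboard, customer: str) -> None:
    # Reuses the research agent's findings instead of repeating the lookup.
    board.notes["draft"] = f"Hi {customer.title()}, regarding your account ({board.notes['history']})..."


def coordinator(customer: str) -> str:
    board = Blackboard()
    for agent in (research_agent, writer_agent):  # run agents in a fixed order
        agent(board, customer)
    return board.notes["draft"]
```

Calling `coordinator("acme")` produces a draft that already includes the account history. Splitting the work this way keeps each agent’s task narrow, which also keeps each agent’s context small.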

Final Thoughts

An LLM agent is a convenient solution for businesses that want to simplify their operations and speed up complex workflows. It can collaborate with other bots to respond to challenging queries and handle complex scenarios autonomously. However, building such bots from scratch takes considerable time and resources, which is why many firms outsource this work to reputable providers. MetaDialog specializes in building top-grade LLMs and customizing them to meet clients’ preferences and unique needs. We help our customers simplify CS processes and other tasks that can be fully automated. Our experts offer personalized solutions for businesses of all sizes that want to use AI to achieve sustainable progress. Get in touch with our team to build an advanced framework and use AI to simplify your routines.