Artificial Intelligence (AI) technology continues to evolve, allowing users to experiment with different types of data inputs to produce high-accuracy outputs. Finding an efficient solution for handling privacy concerns and eliminating biases lets enterprises increase the transparency of their processes and make the most out of algorithmic tools. In this guide, we will explore how systems with multimodal AI capabilities facilitate decision-making, allowing businesses to streamline workflows across various industries.

What is Multimodal AI?

The term refers to the ability of AI solutions to work with different modalities, or types of data. Advanced AI systems use all sorts of sources to come to independent conclusions and make predictions. This approach empowers them to generate comprehensive outputs relevant to the context.

Unlike unimodal systems, advanced solutions collect data from various platforms and handle files in different formats. This enables them to understand each query better and makes their conclusions more human-like. Such products develop a more nuanced understanding of a topic because they perform data fusion, combining insights from several inputs into a single result.

Well-developed multimodal capabilities enable autonomous systems to respond to text prompts, voice inputs, and visual cues. The technology has led to the development of powerful virtual assistants, trained to handle user requests within a fraction of a second.

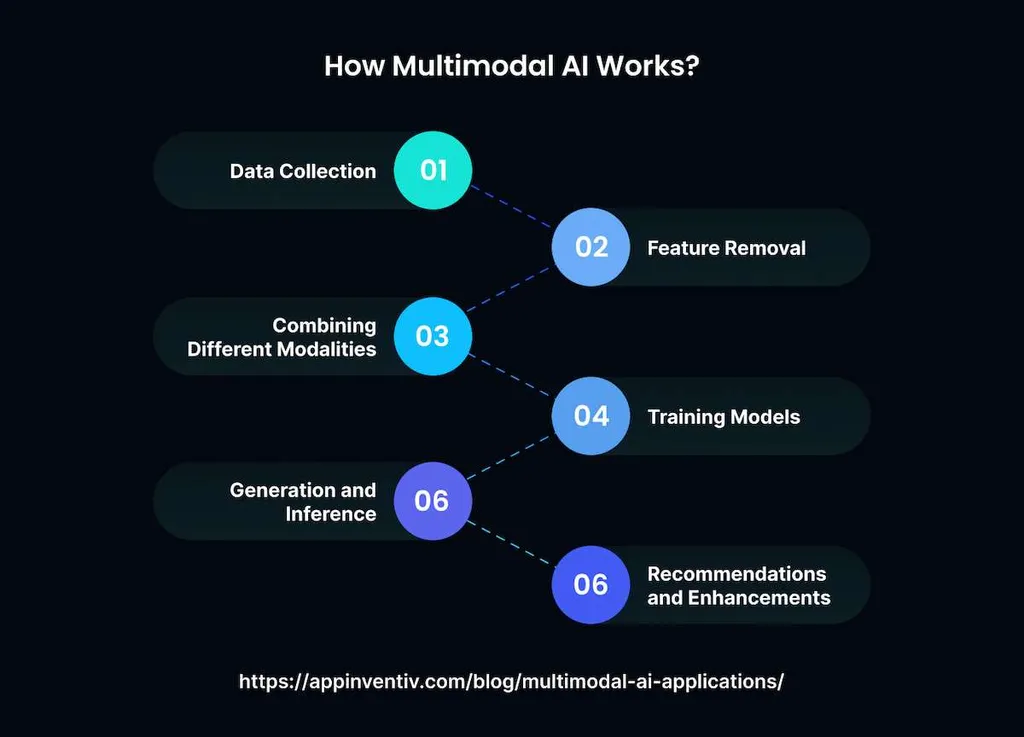

How Does Multimodal AI Work?

Systems powered by multimodal AI combine data from several sources to form an in-depth understanding of a particular situation. The process of reaching a conclusion comprises three main stages.

- Data processing specific to a certain modality. The AI deploys dedicated models to analyze each type of input. For example, it may rely on NLP to handle text inputs and convert them to vectors.

- Fusion. After examining insights from certain models, the AI combines the extracted data and uses neural networks to discover patterns and connections across queries of different kinds.

- Output. The AI system produces a comprehensive reply to a query, generates the requested content, or performs other requests after analyzing several inputs. Unlike single-modality solutions, multimodal AI has a better grasp of an issue, as it puts the issue into the right context.
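
The three stages above can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the toy vocabulary, the hand-written encoders, and the linear scoring step stand in for the trained models a real system would use, and fusion is reduced to simple concatenation.

```python
from typing import List

# Hypothetical toy vocabulary; a real system would use a trained text model.
VOCAB = ["red", "light", "stop", "green", "go"]


def encode_text(text: str) -> List[float]:
    """Stage 1 (text): bag-of-words counts over the toy vocabulary."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


def encode_image(pixels: List[List[float]]) -> List[float]:
    """Stage 1 (image): mean and max brightness of a grayscale grid."""
    flat = [value for row in pixels for value in row]
    return [sum(flat) / len(flat), max(flat)]


def fuse(*vectors: List[float]) -> List[float]:
    """Stage 2: fuse modalities by concatenating their feature vectors."""
    return [value for vector in vectors for value in vector]


def predict(joint: List[float], weights: List[float], bias: float = 0.0) -> float:
    """Stage 3: a linear score over the fused vector stands in for the output model."""
    return sum(x * w for x, w in zip(joint, weights)) + bias


text_vec = encode_text("Red light stop")
image_vec = encode_image([[0.0, 0.5], [1.0, 0.5]])
joint = fuse(text_vec, image_vec)
score = predict(joint, weights=[1.0] * len(joint))  # placeholder weights
```

Concatenation is only one way to combine modalities. It is used here because it keeps each stage visible as a separate function.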

AI agents with such capabilities can resolve many difficult tasks on their own, escalating to human employees only when necessary.

Benefits of a Multimodal Approach

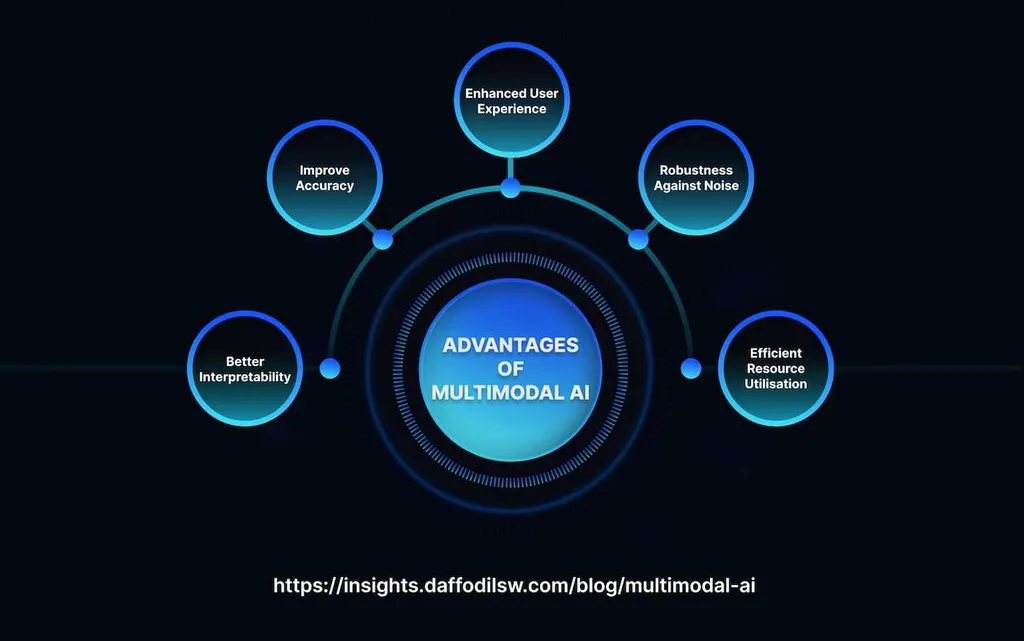

The newest AI tools search the web to find reliable data stored on authoritative platforms. The ability to analyze various inputs enhances their performance. Multimodal AI has several notable advantages:

- Accurate replies. AI bots cross-reference data from available sources to gain a deeper understanding of a topic. For example, self-driving vehicles combine data from cameras with distance estimation tools to get a full picture of their surroundings.

- Augmented contextual understanding. Multimodal AI analyzes words, the tone of voice, and the images attached to a message to interpret a user’s query.

- Human-like communication. Multimodality makes interactions feel more natural, as AI agents can analyze both verbal queries and facial expressions.

Multimodal AI detects patterns better and combines data streams from different sources to deliver reliable outputs. Multimodal AI models can also handle complex challenges. For instance, they can be taught to diagnose a patient’s medical condition by analyzing medical imaging. Their adaptability and their capacity to learn from information extracted from different domains make them more effective at solving challenging issues. Such models also have well-developed content creation capacities and can generate original outputs.
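
To make the idea of cross-referencing sources concrete, here is a minimal sketch of how two distance estimates, such as one from a camera and one from a radar, could be merged. It assumes inverse-variance weighting, a common textbook technique chosen for illustration; the function name and the sample numbers are hypothetical, not taken from any specific vehicle system.

```python
from typing import List, Tuple


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine (value, variance) pairs by inverse-variance weighting.

    Sources with lower variance (more reliable) get a larger weight.
    Returns the fused value and the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical readings in meters: a noisy camera and a more precise radar.
camera = (10.0, 4.0)  # (distance, variance)
radar = (12.0, 1.0)
distance, variance = fuse_estimates([camera, radar])
```

With these sample numbers the fused distance is about 11.6 m, closer to the radar reading, and the fused variance is lower than that of either source on its own.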

Challenges of Multimodal AI

Improved contextual understanding permits AI bots to solve problems of different complexity. However, enterprises that seek to integrate such solutions with legacy systems should be aware of potential issues:

- Data collection. Businesses need high-quality datasets to train AI, and collecting and organizing this information can be quite time-consuming.

- Fusion-related problems. Different sorts of sources may be poorly aligned, making it more difficult to combine the extracted insights.

- Translation. Some AI systems may fail to produce accurate outputs when trying to translate content across modalities.

- Ethical issues. AI models may produce biased outputs. Besides, when sensitive data is used during training, businesses need to address data governance and security concerns.
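
The alignment problem mentioned in the list above often shows up as streams that are sampled at different moments. The sketch below shows one simple way to pair them, by matching each video frame to the nearest sensor reading in time. The function name, the tolerance value, and the sample data are assumptions made for illustration only.

```python
from bisect import bisect_left
from typing import Any, List, Tuple


def align_nearest(
    frames: List[Tuple[float, Any]],
    readings: List[Tuple[float, Any]],
    tolerance: float,
) -> List[Tuple[Any, Any]]:
    """Pair each frame with the nearest reading by timestamp.

    Both lists hold (timestamp, payload) tuples, and readings must be
    sorted by timestamp. Frames with no reading within `tolerance`
    seconds are dropped rather than paired with stale data.
    """
    times = [timestamp for timestamp, _ in readings]
    pairs = []
    for frame_time, frame in frames:
        i = bisect_left(times, frame_time)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(times[j] - frame_time))
        if abs(times[best] - frame_time) <= tolerance:
            pairs.append((frame, readings[best][1]))
    return pairs


frames = [(0.00, "f0"), (0.10, "f1"), (0.20, "f2")]
readings = [(0.01, 5.0), (0.12, 5.5), (0.35, 6.0)]
pairs = align_nearest(frames, readings, tolerance=0.05)
```

In this example the third frame is discarded because no reading falls within 0.05 seconds of it, which is the kind of gap that makes fusion harder in practice.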

Firms must discover how to deal with these problems to increase trust in their AI solutions and maintain transparency.

Real-World Use Cases

By deploying powerful AI agents, businesses can process data faster, reduce resolution times, and automate processes. Apps with multimodal AI capabilities have transformed many industries:

- Healthcare. AI bots analyze medical records and MRI scans, translate patients’ histories, and provide accurate diagnoses.

- Self-driving cars. AI-powered vehicles process data collected by sensors, cameras, and radars to navigate safely.

- Customer service. Pro-grade AI assistants analyze the entire conversation history with a client across multiple communication channels, identify the problems they face, and provide tailored recommendations. They are available 24/7, which makes them even more reliable.

- Content creation and advanced search. Users generate media files based on text input and search for the information they need using prompts in various formats.

As researchers keep improving AI architectures and fine-tuning training methods, new applications of multimodal AI are expected to emerge.

Future Developments in Multimodal AI

With the discovery of new ways of processing various data types within a single framework, companies will deploy multimodal AI more often. Here are some trends that can shape the way businesses operate and process insights:

- Real-time processing capabilities will make AI tools more efficient. AI systems will analyze sensor data more quickly to make lightning-fast decisions.

- Improved cross-modal fusion and more advanced deep learning architectures will streamline the alignment of text, images, and other inputs.

- Unified models trained to interpret and produce multimodal content will be deployed more widely.

- Open-source tools will streamline collaboration among experts in the field and enable researchers to discover new applications of AI.

- Improvements in training data will augment the performance of AI models.

The performance of AI products will be optimized so they consume fewer resources and learn to generate precise replies across all types of input, including those that are insufficiently detailed.

Final Thoughts

When AI systems start combining data with greater accuracy, they produce higher-quality outputs. Building enterprise-level AI models from scratch can be costly and extremely time-consuming. This is why many businesses outsource this task to third-party professionals. At MetaDialog, we have an experienced team of AI engineers who develop powerful LLMs. Our AI-powered support platform helps our clients improve the productivity of their CS teams by 5 times and reduce the average resolution time to 20 seconds. Get in touch with our team and discover how to deploy multimodal AI to solve tasks in real-world scenarios with ease.