Retrieval-augmented generation (RAG) is an AI framework designed to provide large language models (LLMs) with information retrieved from external knowledge bases. LLMs are known for accuracy issues, which hold back their wider adoption. They may fail to generate relevant replies unless their training data is well-organized, current, and of high quality. In this guide, we will explore how RAG enables AI models to improve their responses by supplying them with information retrieved from authoritative sources.

Why Use RAG in AI?

Artificial intelligence (AI) applications use LLMs to make chatbots more powerful and to increase the efficiency of apps built on natural language processing tools. They enable businesses to streamline their customer support routines, automate processes, reduce wait times, and ensure higher client satisfaction. However, LLMs have their limitations. They often produce inconsistent outputs, and they rely on static training data that may quickly become outdated. Other documented issues associated with LLMs include:

- Providing false information when the model does not have the answer.

- Using outdated facts to produce a reply in a current context.

- Relying on non-authoritative sources.

- Offering inaccurate outputs and misusing terminology.

RAG allows businesses to address these challenges. The framework gives LLMs access to information stored in specified knowledge bases, which makes it easier to control the quality of outputs.

Companies implement RAG to increase the efficiency of their LLMs and give them access to current data sources. The framework makes it easier to check the information a model uses and verify its authenticity, which helps optimize and augment the outputs an LLM produces. It provides access to references beyond the training datasets, enabling AI bots to generate relevant replies to queries, translate text with high accuracy, and answer questions.

RAG augments LLMs’ capabilities, making them more effective in specific domains. It allows enterprises to build and use extensive internal knowledge bases without spending a lot of valuable resources on retraining their models. RAG lets teams adjust LLM replies and improve their accuracy across a wide range of contexts.

Benefits of Retrieval-Augmented Generation

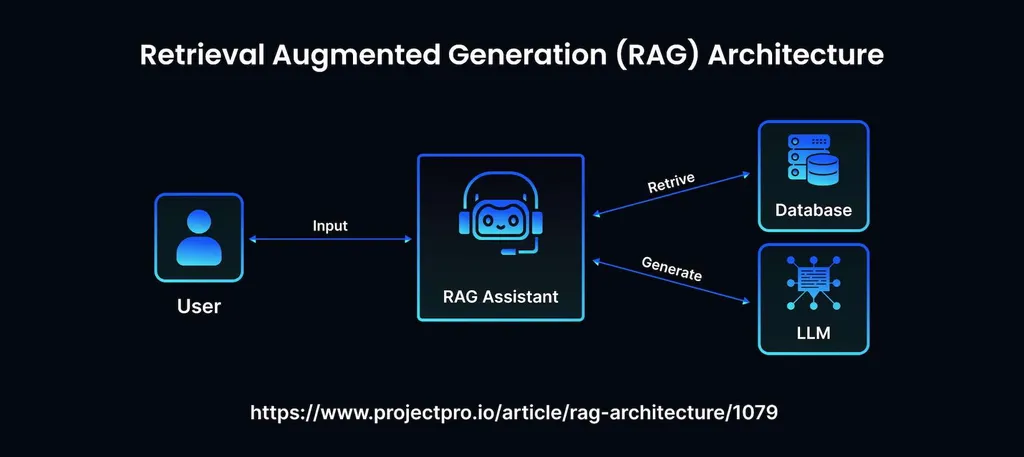

Organizations deploy AI systems to automate routines, simplify workflows, and save money. RAG enables them to enhance their chatbot’s performance and decrease the average response time. RAG architecture includes a retrieval system and an LLM. There are several notable upsides to implementing this framework.

- Affordable implementation. Companies utilize API-accessible foundation models (FMs) trained on well-organized datasets. Using RAG is cheaper than retraining FMs. It enables ventures to make new information accessible to their LLMs without spending significant resources on fine-tuning a model.

- Up-to-date information. Even if one trains an LLM on current data, the model will inevitably become outdated as time goes by. RAG lets models access the latest research, statistical reports, or news. It enables AI tools to retrieve insights from social media, news platforms, and other sources where information gets updated regularly.

- Trustworthy responses. RAG provides outputs with citations and references to reputable sources. Users can double-check the insights and ask for clarification. It fosters confidence in the replies written by AI bots.

- Improved oversight. With RAG, it becomes easier to upgrade AI applications. Developers use the framework to control the resources an LLM has access to and adjust the functioning of generative AI models. Enterprises use RAG to introduce authorization guidelines and streamline troubleshooting.
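The citation and oversight points above can be illustrated with a minimal sketch. It assumes each stored document carries a source label and a set of roles allowed to read it. The `Document` class, its field names, and the sample records are hypothetical and made up for illustration; they do not come from any specific RAG library.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Document:
    # Hypothetical record layout, assumed for illustration only.
    doc_id: str
    source: str               # human-readable citation shown to the user
    text: str
    allowed_roles: frozenset  # roles permitted to retrieve this document


def visible_documents(docs: List[Document], user_roles: Set[str]) -> List[Document]:
    """Keep only the documents that at least one of the user's roles may read."""
    return [d for d in docs if d.allowed_roles & user_roles]


def format_with_citations(answer: str, used_docs: List[Document]) -> str:
    """Append a numbered source list so the reader can verify the reply."""
    refs = "\n".join(f"[{i}] {d.source}" for i, d in enumerate(used_docs, start=1))
    return f"{answer}\n\nSources:\n{refs}"


# Made-up sample data.
docs = [
    Document("hr-001", "HR Handbook, section 4", "Leave requests go through the HR portal.",
             frozenset({"employee", "manager"})),
    Document("fin-007", "Finance Playbook", "Quarterly forecasts are confidential.",
             frozenset({"finance"})),
]

visible = visible_documents(docs, {"employee"})
reply = format_with_citations("Annual leave requests go through the HR portal.", visible)
print(reply)
```

Filtering before retrieval, rather than after generation, means restricted text never reaches the prompt at all. This is one possible design choice, not the only way to enforce authorization.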

RAG enables businesses to use cost-effective solutions to resolve common issues and to connect a model to third-party platforms it can draw on. It lets developers build custom LLM-based systems and apply them in different contexts.

How Does Retrieval-Augmented Generation Work?

RAG adds an information retrieval component to an LLM. This component analyzes user input and retrieves information from external sources, which the model then uses to generate a reply. It enables AI tools to access more extensive sets of data and supply users with fact-based insights. The LLM uses both the newly retrieved knowledge and its training data to generate improved responses. Here are the main stages of the process:

- Creating external data. External data is any data outside the LLM’s original training set. This information can be accessed from APIs, repositories, and other sources. It may be stored in various formats, including documents and records. An embedding model converts this data into numerical vectors and saves them to a vector database. This builds a knowledge library that AI tools can search.

- Retrieving information. After a system converts a user input into a vector representation, it searches for relevant insights in vector databases. Many organizations already deploy chatbots trained to answer HR questions. If an employee is interested in annual leave, digital assistants will supply them with official policy documents and their previous leave records. As a result, a user will receive a highly specific response.

- Enhancing LLM prompts. The RAG system augments the user’s input with the retrieved facts. With this additional context in the prompt, the LLM can better grasp the user’s intent and generate detailed replies.

- Updating external sources. An organization can keep external knowledge bases relevant by updating them automatically, which includes refreshing the stored vector representations. Companies apply different change management practices to run these updates asynchronously.
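The four stages above can be sketched in a few lines of Python. This is a simplified illustration, not a production implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, `VectorStore` is a hypothetical in-memory substitute for a vector database, and the policy texts are made-up sample data.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: word counts instead of dense vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """Hypothetical in-memory replacement for a vector database."""

    def __init__(self):
        self._items = {}  # doc_id -> (text, vector)

    def upsert(self, doc_id: str, text: str) -> None:
        # Stages 1 and 4: store a document, or re-embed it after an update.
        self._items[doc_id] = (text, embed(text))

    def search(self, query: str, k: int = 2):
        # Stage 2: embed the query and rank stored documents by similarity.
        q = embed(query)
        scored = [(cosine(q, vec), doc_id, text) for doc_id, (text, vec) in self._items.items()]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]


def build_prompt(question: str, hits) -> str:
    # Stage 3: augment the user's question with the retrieved context.
    context = "\n".join(f"[{doc_id}] {text}" for _, doc_id, text in hits)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


store = VectorStore()
store.upsert("leave-policy", "Employees receive 25 days of annual leave per year.")
store.upsert("expense-policy", "Expense reports must be submitted within 30 days.")
store.upsert("remote-policy", "Remote work requires manager approval.")

question = "How many days of annual leave do employees receive?"
hits = store.search(question, k=1)
prompt = build_prompt(question, hits)
print(prompt)  # this augmented prompt is what would be sent to the LLM

# Stage 4: when the policy changes, update the stored document and its vector.
store.upsert("leave-policy", "Employees receive 28 days of annual leave per year.")
```

The LLM call itself is left out on purpose, since it depends on the chosen provider. In a real system, a trained embedding model and an approximate nearest-neighbor index would replace the word-count vectors and the linear scan used here.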

The simplicity of the framework explains its quick adoption by AI system developers. Companies utilize RAG with LLMs to allocate resources more effectively and strengthen their relations with clients.

RAG Use Cases

The framework facilitates access to remote data repositories and widens the range of potential applications. An AI model connected to a medical platform will help healthcare professionals assist patients more efficiently. Financial analysts can benefit from using chatbots with access to recent market updates. Most companies can streamline access to their technical manuals and policy guides and deploy powerful LLMs with access to knowledge bases. These sources can help them improve the quality of their customer support services, boost the effectiveness and productivity of their development teams, and simplify employee onboarding and training.

Google, IBM, AWS, Microsoft, and other top companies have already adopted RAG AI solutions. Customers often use ambiguous wording, which makes it challenging to interpret their queries correctly. For this reason, businesses started to improve the capabilities of their LLMs. RAG helps prevent an AI model from making up an answer when it fails to access the source it needs.

On its own, an LLM requires additional training to refrain from generating false replies when it cannot answer based on the facts it has. A basic chatbot that does not use RAG may give wrong instructions or fail to advise a user on policies. RAG helps a digital assistant generate replies based on the latest information.
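One common way to keep a bot from inventing answers is to skip generation when retrieval finds nothing relevant enough. The sketch below assumes the retriever returns `(score, text)` pairs with similarity scores between 0 and 1. The function names, the `0.5` threshold, and the sample texts are hypothetical, and `fake_llm` only stands in for a real model call.

```python
from typing import Callable, List, Tuple

FALLBACK = "I could not find this in the knowledge base. Please contact support."


def answer_with_guard(
    question: str,
    hits: List[Tuple[float, str]],
    generate: Callable[[str], str],
    min_score: float = 0.5,  # assumed threshold; tune it on real queries
) -> str:
    """Call the model only when at least one retrieved passage is relevant enough."""
    relevant = [text for score, text in hits if score >= min_score]
    if not relevant:
        # No trustworthy source was retrieved, so do not let the model guess.
        return FALLBACK
    context = "\n".join(relevant)
    return generate(f"Context:\n{context}\n\nQuestion: {question}")


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call: echoes the first context line."""
    return "LLM answer based on: " + prompt.splitlines()[1]


grounded = answer_with_guard(
    "What is the refund window?",
    [(0.82, "Refunds are accepted within 14 days.")],
    fake_llm,
)
ungrounded = answer_with_guard(
    "Do you ship to Mars?",
    [(0.12, "Refunds are accepted within 14 days.")],
    fake_llm,
)
print(grounded)
print(ungrounded)
```

The right threshold depends on the embedding model and the data, so it is usually chosen by testing against real user queries.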

Here are the most notable applications of RAG:

- Delivery assistance chatbots. DoorDash implemented a RAG bot that streamlines food delivery.

- Tech support bots. LinkedIn started to use RAG to create knowledge graphs based on the issues that occurred in the past. When a person asks a question, the chatbot retrieves relevant sub-graphs to produce quick responses.

- Company policies chatbots. Bell deploys RAG to simplify knowledge management and make it easy for employees to access its policies.

- AI faculty assistants. Harvard Business School has implemented a chatbot to help students prepare for its entrepreneurship course. It allows them to learn more about complex issues, discover information about case studies, and perform other tasks.

Besides, companies utilize RAG to generate short video summaries and create links to important moments. Such assistants also facilitate creating fraud reports and collecting data for investigation.

Final Thoughts

Retrieval-augmented generation is invaluable for grounding LLMs in the most recent information that is easy to verify. Implementing this framework allows ventures to save on retraining costs. Many companies lack the time and resources necessary for developing an advanced LLM. This is why they outsource this task to reputable service providers like MetaDialog. We have a team of experts who have years of experience in building LLMs. Our specialists develop and deploy bots designed to use RAG to retrieve live data. Get in touch with our team and discover how to improve your customer support team’s productivity by implementing a RAG AI chatbot.