In the fast-growing generative artificial intelligence (genAI) industry, the size of a language model is often treated as the measure of its potential. Large language models (LLMs) such as GPT-4 dominate genAI and have shown a strong ability to understand and generate natural language. Recently, however, the picture has started to change. Small language models (SLMs), long overshadowed by their larger counterparts, are becoming capable generative AI tools across a range of applications, challenging the long-held belief that bigger is always better. This blog post explains what SLMs are and how you can apply them to your benefit.

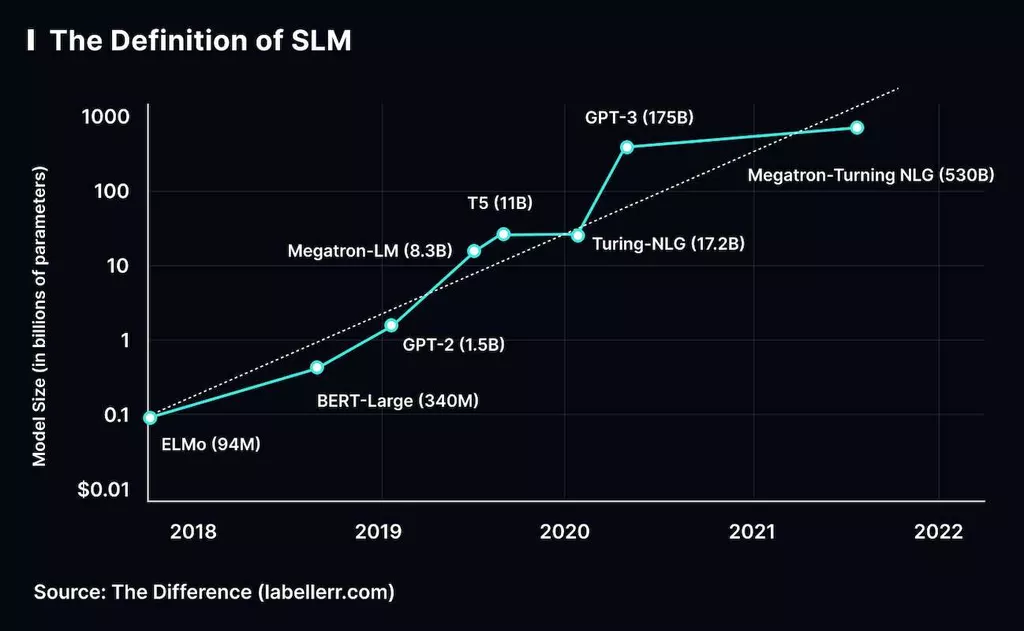

The Definition of SLM

A small language model, sometimes called an edge model, is an AI language model that uses a neural network to generate natural language content. The word «small» refers to the number of model parameters, the size of the network, and the amount of data used to train it. Parameters are the numeric values (weights) that determine how the model transforms its input into output. The more parameters a model has, the more complex it is, and the more training data and computational power it requires.

There is no universally agreed threshold. Many researchers consider language models with fewer than 100 million parameters to be small, although some set the bar lower, at 10 or even 1 million parameters. For comparison, the largest modern LLMs have well over 100 billion parameters.
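To make these parameter counts more concrete, here is a minimal Python sketch that estimates the size of a GPT-style decoder-only transformer from its shape. It relies on a common back-of-the-envelope approximation: it assumes a feed-forward width of 4 × d_model and embeddings shared between input and output, and it ignores biases, layer norms, and positional embeddings. The function name `approx_decoder_params` is hypothetical.

```python
def approx_decoder_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a GPT-style decoder-only transformer.

    Assumptions: feed-forward width of 4 * d_model, input and output embeddings
    shared, and biases, layer norms, and positional embeddings ignored.
    """
    # Attention: query, key, value, and output projections, each d_model x d_model
    attention = 4 * d_model * d_model
    # Feed-forward: two matrices, d_model x (4 * d_model) and (4 * d_model) x d_model
    feed_forward = 2 * d_model * (4 * d_model)
    # Token embedding matrix: one d_model-sized vector per vocabulary entry
    embeddings = vocab_size * d_model
    return n_layers * (attention + feed_forward) + embeddings


if __name__ == "__main__":
    # A configuration shaped like GPT-2 small: 12 layers, d_model = 768, 50,257 tokens
    n = approx_decoder_params(n_layers=12, d_model=768, vocab_size=50257)
    print(f"{n:,} parameters")  # prints "123,532,032 parameters"
```

The estimate lands just above the 100-million-parameter threshold, which shows how blurry the boundary between «small» and «large» can be: under the strictest definitions, even GPT-2 small would not count as an SLM.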

Operating Principles of Small Language Models

SLMs work on the same principle as LLMs: they use neural networks to learn the statistical patterns of natural language from large amounts of text. The best-known neural network architecture for language modeling is the transformer. It stacks several layers of attention mechanisms, which let the model focus on the most relevant parts of its input. To reduce model size and maximize efficiency, however, SLM developers rely on additional techniques:

- Knowledge distillation: knowledge is transferred from a large, well-trained «teacher» LLM to a smaller «student» SLM, which learns to reproduce the teacher’s outputs and thus inherits its key capabilities in a compact form.

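- Pruning and quantization: pruning removes weights or connections that contribute little to the model’s output, while quantization lowers the numerical precision of the remaining weights (for example, from 32-bit floats to 8-bit integers). Together they reduce the model’s size, memory footprint, and compute requirements.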

- Efficient architectures: developers increasingly adopt architectures designed specifically for SLMs to improve performance and efficiency.
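The soft-target loss at the heart of knowledge distillation can be sketched in a few lines of plain Python. This is a minimal, framework-free illustration, assuming a single prediction step with teacher and student logits over the same vocabulary; the function names, the example logits, and the default temperature of 2.0 are illustrative choices, not taken from any specific library.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature yields a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    temperature: float = 2.0,
) -> float:
    """KL divergence KL(teacher || student) between softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0  # terms with zero teacher probability contribute nothing
    )
    # Scaling by T^2 keeps gradient magnitudes comparable across temperatures
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits for three tokens
    student = [2.0, 1.5, 0.5]  # hypothetical student logits
    print(distillation_loss(student, teacher))
```

In practice, this term is usually combined with the ordinary cross-entropy loss on the true labels, and the student is trained by minimizing a weighted sum of the two. The temperature softens the teacher’s distribution, so the student also learns how the teacher ranks the less likely tokens.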
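To illustrate the second technique, here is a minimal sketch of unstructured magnitude pruning followed by symmetric 8-bit quantization, applied to a flat list of weights. Real toolkits work on whole tensors and offer many variants (structured pruning, per-channel scales, calibration); the function names and sample weights here are hypothetical and chosen for clarity.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Set the given fraction of weights with the smallest magnitudes to zero."""
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from the smallest to the largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each weight w is stored as q = round(w / scale) in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 codes."""
    return [scale * v for v in q]


if __name__ == "__main__":
    weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
    pruned = magnitude_prune(weights, sparsity=0.5)
    print(pruned)  # [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
    q, scale = quantize_int8(pruned)
    print(q)  # [127, 0, 48, -95, 0, 0]
    print(dequantize(q, scale))
```

Storing 8-bit codes plus one float scale instead of 32-bit floats cuts weight storage roughly fourfold, and sparse-aware kernels can skip the pruned zeros. The price is small rounding errors, like those visible in the dequantized values.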

Another common distinction is training data: many SLMs are trained or fine-tuned on limited, curated datasets rather than on large-scale repositories such as Wikipedia or Common Crawl. This gives them a narrower scope of application but deeper knowledge of a particular domain.

Reasons for Implementing SLM

The growing interest in small language models is due to several key factors, including their efficiency, adoption costs, and improved customization capabilities. Let’s analyze each of these aspects separately.

Efficiency

Because SLMs have fewer parameters, they are more computationally efficient than larger models such as GPT-4 in several ways:

- They deliver faster inference and higher throughput, because each input token is processed by fewer parameters.

- They need less memory and storage space because of their small size.

- They can be trained on smaller datasets, since the amount of training data a model needs generally grows with its size.
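The efficiency gap is easy to quantify with two rules of thumb: the memory needed to store the weights is roughly the parameter count times the bytes per parameter, and a forward pass costs roughly 2 floating-point operations per parameter per token. The sketch below applies both to a 100-million-parameter SLM and to a 175-billion-parameter model (the size of GPT-3). It deliberately ignores activations, the attention cache, and runtime overhead, so real requirements are higher; the function names are hypothetical.

```python
# Bytes needed to store one parameter at common numeric precisions
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: int, precision: str = "fp16") -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def forward_flops_per_token(n_params: int) -> int:
    """Rule of thumb: about 2 FLOPs (one multiply, one add) per parameter per token."""
    return 2 * n_params


if __name__ == "__main__":
    models = {"SLM (100M params)": 100_000_000, "GPT-3-sized (175B params)": 175_000_000_000}
    for name, n in models.items():
        print(
            f"{name}: {weight_memory_gb(n, 'fp16'):.1f} GB of weights in fp16, "
            f"~{forward_flops_per_token(n):.1e} FLOPs per token"
        )
```

At fp16, the small model’s weights take about 0.2 GB, while the GPT-3-sized model needs about 350 GB, far more than any single consumer GPU offers. Quantizing to int8 halves both figures again.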

In other words, SLMs are not only faster but also use resources more efficiently. This matters most in applications where speed and resource efficiency are critical.

Expenses

Building and running LLMs requires significant computing power for both training and deployment. Counting hardware and development costs, OpenAI reportedly spent tens of millions of dollars to create GPT-3. Today, many public LLMs are unprofitable because of their high resource requirements.

Edge models, by contrast, can be trained, deployed, and run on standard hardware that most businesses can afford, without significant financial costs. Their modest resource requirements open up considerable potential in edge computing, where models run locally in standalone mode rather than in the cloud. The range of SLM applications is likely to expand in the near term.

Customization options

A key advantage of SLMs over larger models is how easily they can be customized. Although some LLMs, including ChatGPT, are versatile across many tasks, that versatility is a trade-off: they are optimized for balanced performance across many domains rather than for excellence in any one of them.

SLMs, in contrast, can be readily adapted to narrower domains and specific applications. Their fast iteration cycle makes it practical to experiment with adapting a model to multiple categories of data using the following techniques:

- Pre-training: an SLM can be pre-trained on a domain-specific corpus so that it learns the language of a particular field.

- Fine-tuning: further training of a pre-trained model on an end-task dataset to optimize its performance on that task.

- Prompt-based learning: designing prompts (instructions and examples) that steer the model toward a specialized task without changing its weights.

- Architecture modification: changing the model structure to perform specific types of work.
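Prompt-based (hint-based) learning is the lightest of these techniques, since it changes only the model’s input. The sketch below assembles a few-shot prompt from an instruction, a handful of labeled examples, and a new query. The «Input:/Output:» layout, the function name, and the ticket-routing task are hypothetical; in practice, the format should match whatever the chosen model was trained or fine-tuned to expect.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Combine an instruction, labeled examples, and a new input into one prompt string."""
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    # End with the unanswered query so the model completes the final "Output:" line
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Route each support ticket to one of: billing, technical, other.",
        [
            ("I was charged twice this month.", "billing"),
            ("The app crashes when I log in.", "technical"),
        ],
        "How do I update my payment card?",
    )
    print(prompt)
```

Because the weights stay untouched, a prompt like this can be revised and re-tested in seconds, which pairs well with the fast iteration cycle of small models.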

Adapting an LLM in these ways requires much more time, effort, and compute. With SLMs, developers can quickly tailor models to their specific needs.

Primary Limitations of SLM

Although SLMs provide many benefits, they also pose some challenges. When implementing them, keep the following limitations in mind:

- Generalization problems: small models often struggle to generalize to complex problems that require a nuanced understanding of context. Thorough evaluation is necessary to ensure that they perform well on the intended tasks.

- Complexity trade-offs: choosing an edge model means trading model complexity against efficiency. Practitioners must balance the need for speed and low resource use against the complexity of the tasks at hand.

Because SLMs learn from less data, their knowledge is more limited than that of LLMs. They have a narrower understanding of language and context, which often results in less accurate and less detailed answers than larger models provide.

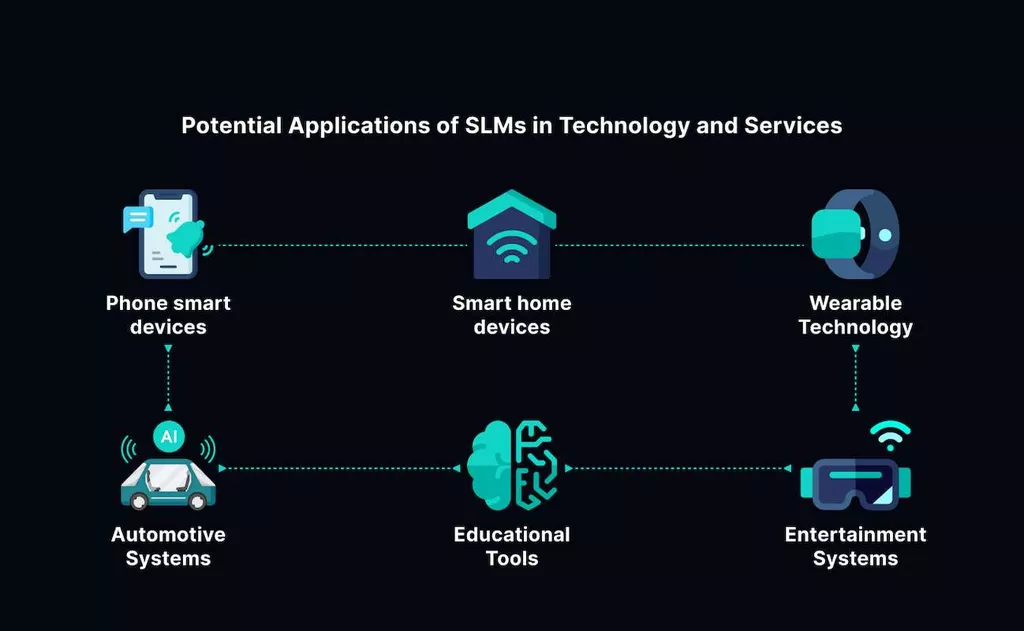

The Most Famous Use Cases of SLMs

Recent advances in SLM training techniques are driving their wider adoption. Let’s explore some promising ways to apply small models in different areas.

- Customer experience automation: small language models are well suited to customer interactions thanks to their flexibility and efficiency. They can handle routine issues and customer requests, freeing specialists to focus on other tasks.

- Product development support: by helping to generate ideas, test functionality, and gauge customer demand, small models can play an important role in creating digital products.

- Email automation: SLMs speed up online correspondence by helping draft messages, automating responses, and suggesting improvements to the text. Faster, more efficient email exchange increases the productivity of individual specialists and of the business as a whole.

- Marketing and sales: SLMs can create personalized marketing materials, including promotional offers and email campaigns, helping enterprises get more out of their advertising and sales efforts.

SLMs allow companies to achieve significant results with modest spending. They help automate tasks, personalize customer interactions, and support better decision-making.

Real-World Examples of SLMs

Companies and developers are actively using edge models in their applications. Let’s look at some well-known examples:

- Google: the company uses compact models in its search engine to interpret the meaning of search queries more accurately and return more relevant results.

- Google Bard: Google initially launched its chatbot Bard, which can converse on various topics and generate text content, on a lightweight version of its LaMDA model that requires significantly less computing power.

- Hugging Face: the company provides tools for developers working with SLMs, including a library of pre-trained models they can use to build their own applications.

Since SLMs are still at an early stage of development, many more successful applications are likely to emerge.

Final Words

SLMs represent a significant advance in artificial intelligence. They are efficient, versatile solutions that can compete with popular LLMs on many specialized tasks. By cutting costs and simplifying architecture, small language models are changing computing standards and showing that size is not the main factor determining performance. Limitations remain, such as difficulty handling complex context, but active research in this area is likely to improve the capabilities of small models in the near future.

If you plan to adopt SLMs in your business, we recommend consulting MetaDialog experts. Book a demo on our site to get efficient, custom language models that will help grow your business.