Companies need to process large volumes of information to create high-quality datasets and improve the performance of their Large Language Models (LLMs). The transformer architecture allows businesses to develop powerful tools trained on extensive data repositories. Such models became popular thanks to their support for parallel processing, reduced training time, and advanced self-attention mechanisms. In this guide, we explore how businesses can use a transformer model (TM) to develop solutions that provide accurate, context-relevant interpretations. We also consider potential areas of application for such tools and examine how they can help ventures achieve higher scalability.

What is a Transformer Model?

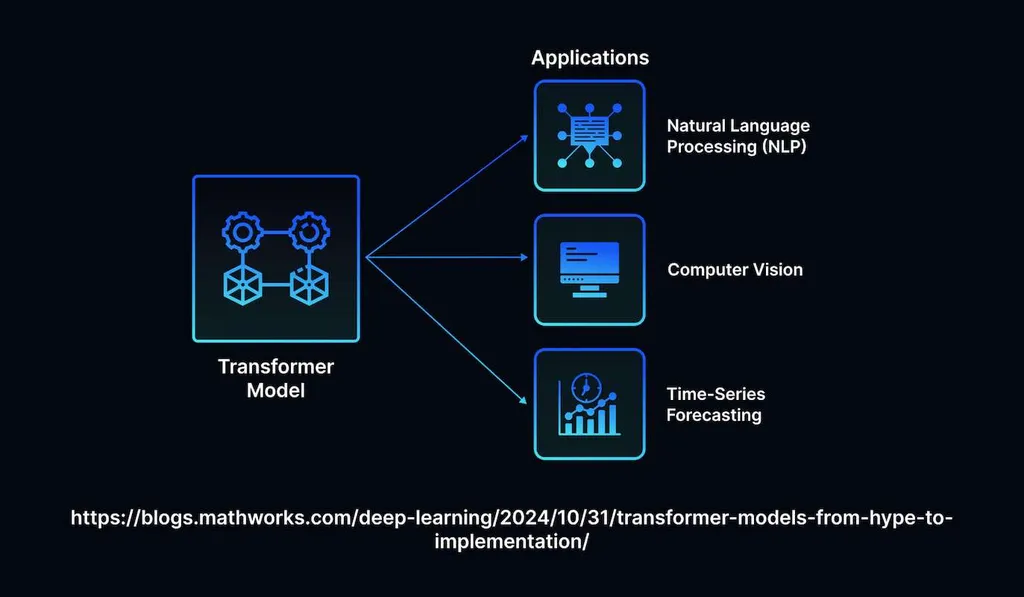

The term describes a type of neural network architecture (NNA) developed to handle long sequences of data using self-attention mechanisms. These models capture long-range dependencies (LRD), that is, relationships between elements that sit far apart in a sequence. This makes them superior to their predecessors and widens the range of tasks they can handle. Transformers deliver strong results in natural language processing (NLP) and are also suitable for computer vision tasks.

Transformers are designed to capture how the meaning of a sentence may change because of subtle differences in wording. The architecture was built to interpret an input and produce natural-sounding output in a specific context. Tools based on it model the links between the components of each sequence to perform speech recognition, translate texts, and analyze other types of input.

Why Use Transformers?

Earlier language models, such as recurrent neural networks (RNNs), relied on deep learning to better understand inputs in natural languages. In most cases, they guessed the continuation of a sequence based on the available information, much like the autocomplete tool that finishes a sentence a user types on a smartphone. While such suggestions may be helpful, they are not always relevant to the user's intent.

Machine learning (ML) models followed the same principle, establishing links between word combinations to guess the continuation of a phrase. However, these approaches did not allow AI tools to preserve their understanding of context once the input exceeded a certain length. Early models struggled even with simple paragraphs: they often could not keep the first and last sentences of a paragraph consistent with the same situation. Because they did not retain the necessary connections, their outputs were often irrelevant to the questions asked.
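The "guess the continuation" approach and its lack of context can be illustrated with a toy bigram model. This is a hypothetical minimal sketch, not how any production system works: it predicts the next word only from the single previous word, so it suggests the same continuation for "bank" whether the sentence is about finance or a river.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows each word in a (toy) training text."""
    words = text.lower().split()
    table: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def suggest_next(table: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`; all earlier context is ignored."""
    followers = table.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the bank raised rates . we sat on the river bank . the bank raised fees"
table = train_bigrams(corpus)

# The model only sees "bank", so "we sat on the river bank" still gets the
# financial continuation learned from the other sentences.
print(suggest_next(table, "bank"))
```

Because the model's entire "memory" is one word, no amount of earlier text can change its suggestion, which is the limitation described above.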

Transformers represented an innovative approach to processing long-range dependencies in textual inputs. Here are the most notable upsides of adopting the technology:

- Large-scale LLMs. The approach led to enterprise-level solutions. Parallel computation reduced training times and sped up processing, which enabled the development of GPT and BERT, LLMs designed to learn rich language representations. The largest such models have billions of parameters and capture many nuances when analyzing sentences.

- Streamlined customization. Transformers work well with retrieval-augmented generation (RAG) and allow companies to use transfer learning more effectively. These approaches make it easier to adapt existing models to an industry's needs. After a model is pre-trained on an extensive, general-purpose dataset, it can be fine-tuned on small datasets containing industry-relevant knowledge. This practice simplifies the use of complex models and helps overcome the limitations of traditional ones, so transformers can deliver consistent performance across various industries.

- Multimodal AI systems. Transformer models make it easier to build AI tools for tasks that combine several types of data. DALL-E, for example, shows how the architecture can generate pictures from text inputs. Developers use transformers to build AI applications that process various types of input and tackle tasks more creatively.

- AI research. Transformers changed the way ML is used. They enabled new architectures and powerful apps designed to solve complex problems, and they make it easier to interpret diverse inputs and generate comprehensive outputs in human language.

Such models made it possible to develop apps that improve customer experience and analyze market data to uncover profitable business opportunities.

Main Use Cases of Transformers

Firms train transformer models on all sorts of sequential data, including text in human languages, music, programming languages, and more. Below, we briefly outline the most notable applications of TMs.

- NLP. Transformers make it easier for machines to grasp the meaning of sentences in human language, recognize intent, and interpret inputs with high accuracy. They can analyze multi-page documents and provide detailed summaries. These tools are also well suited to content generation in different industries and niches.

- Machine translation. Modern apps rely on transformers to provide real-time translation with a high level of fluency. Compared with earlier approaches, the architecture produces translations of noticeably higher quality.

- DNA sequence analysis. Companies increasingly use TMs to estimate the potential impact of genetic mutations, interpret repeating genetic patterns, and discover DNA regions associated with specific diseases. These capabilities make transformers particularly useful for personalized healthcare, helping professionals find effective treatments based on genetic data.

- Protein structure analysis. Researchers use TMs to model long chains of amino acids and predict how proteins will be structured. This helps them understand how complex protein structures are organized, supports drug discovery, and aids the interpretation of biological data.

As AI technology continues to develop, companies will find new applications for transformers.

How Do Transformers Work?

Neural networks consist of several layers of interconnected nodes. Their design is loosely inspired by the human brain, which allows them to handle complex tasks. Traditional sequence models, such as RNNs, rely on an encoder/decoder pattern: the encoder reads an input sequence, such as text, and compresses it into a mathematical representation, and the decoder uses this summarized input to produce an output. Because each part of the data is processed sequentially, an AI tool may forget or omit some details when a sequence is too long.
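The forgetting effect of sequential processing can be shown with a deliberately simplified recurrent encoder. This is a minimal sketch under stated assumptions: a single scalar state and a fixed, hypothetical `decay` factor stand in for the learned weight matrices and nonlinearities of a real RNN. Every step has to wait for the previous one, and the contribution of early elements shrinks with each step.

```python
def encode_sequentially(values: list[float], decay: float = 0.5) -> float:
    """Toy RNN-style encoder: fold a sequence into one fixed-size state, step by step.

    `decay` is a hypothetical constant; real RNNs learn weight matrices and
    apply nonlinearities, but the step-by-step dependency is the same.
    """
    state = 0.0
    for x in values:
        # Each step depends on the previous state, so positions cannot be
        # processed in parallel.
        state = decay * state + x
    return state


# Put a signal only in the first position and see how much of it survives.
for n in (2, 5, 10):
    sequence = [1.0] + [0.0] * (n - 1)
    print(n, encode_sequentially(sequence))
```

The longer the sequence, the smaller the trace the first element leaves in the final state, which is why long inputs lose early details in this kind of architecture.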

Transformer models rely on a self-attention mechanism to analyze all elements of a sequence simultaneously and weigh how relevant each one is to the others. This allows a TM to focus on the most meaningful pieces of data and generate outputs that are more relevant to the context. Because these computations run in parallel rather than step by step, transformers can be trained efficiently on larger datasets and produce better results. TMs can also process longer documents and keep track of the information in them to generate replies that fully answer a user's query.
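The core computation behind self-attention is scaled dot-product attention, softmax(QKᵀ/√d)·V. The sketch below is a simplified illustration, assuming Q = K = V = the raw token vectors (real transformers first multiply the input by learned projection matrices and use several attention heads). Each token scores every other token, the scores become weights via softmax, and the output for each token is a weighted mix of all tokens.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    weights = []
    for q in x:
        # Each query row is independent of the others, so in practice all rows
        # are computed in parallel as one matrix multiplication.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights.append(softmax(scores))
    # Output for token i = sum over j of weight[i][j] * value[j].
    outputs = [
        [sum(w * v[j] for w, v in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # hypothetical 2-d token vectors
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

The first token attends equally to itself and to the identical third token, and less to the second one, even though the third token is further away: distance in the sequence does not weaken the connection.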

Different Types of Transformer Models

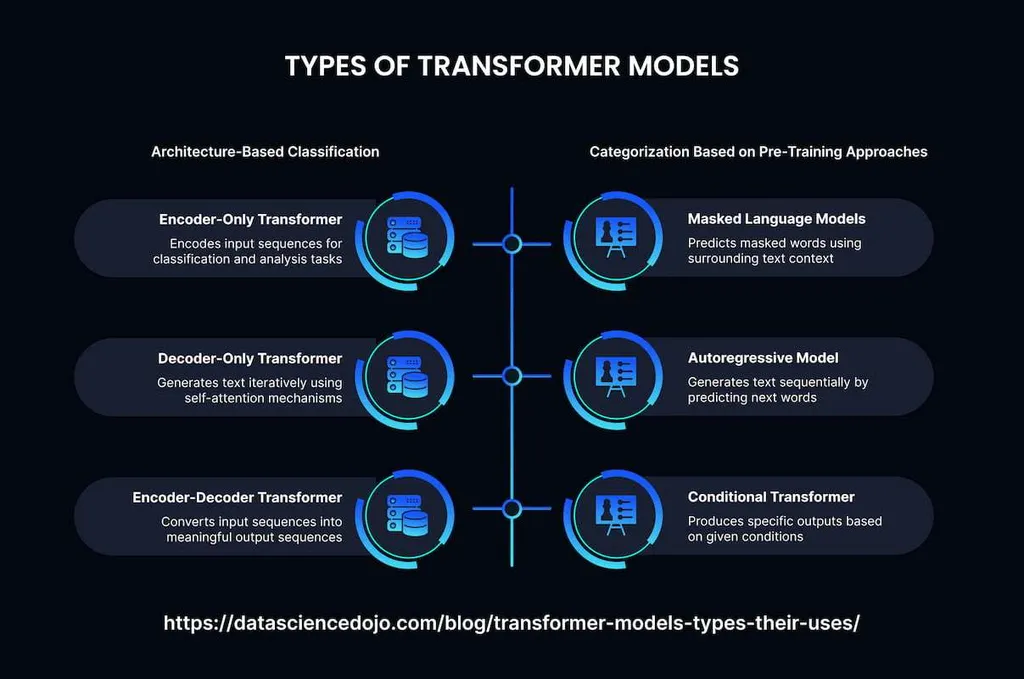

Even though TMs were introduced relatively recently, they already underpin many complex architectures. Below, we describe the main varieties that exist today:

- Bidirectional TMs are built to analyze each word in the context of the whole sentence instead of interpreting an isolated segment. The model takes into account tokens on both the left and the right of each position, which improves interpretation and understanding. BERT is the best-known example; such models can power HR chatbots designed to answer challenging staff queries.

- Generative TMs, such as GPT, rely on transformer decoders trained on large volumes of textual data. They use autoregression: each new token is predicted from the tokens that precede it, which lets the model continue an input sentence.

- Hybrid (encoder-decoder) models, such as BART, combine the capabilities of BERT and GPT: they read the whole input sentence and then produce the output one token at a time.

- Vision TMs classify pictures by splitting them into patches and processing the patch sequence with a transformer encoder. Related variants are also used to generate images and handle multimodal tasks.
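Two of the differences between these varieties can be sketched in a few lines. The first function builds an attention mask: a bidirectional model lets every position see every other position, while a generative (autoregressive) model uses a causal mask so that each position sees only itself and earlier tokens. The second shows the first step of a vision transformer, cutting an image into non-overlapping patches that each become one token. Both are simplified illustrations with hypothetical function names; a real vision model also applies a learned linear projection to each flattened patch and adds position information.

```python
def attention_mask(n: int, causal: bool) -> list[list[int]]:
    """mask[i][j] = 1 if position i may attend to position j, else 0."""
    return [[1 if (not causal or j <= i) else 0 for j in range(n)] for i in range(n)]


def split_into_patches(image: list[list[int]], size: int) -> list[list[int]]:
    """Cut an H x W grid into size x size patches, each flattened into one token."""
    h, w = len(image), len(image[0])
    if h % size or w % size:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, h, size):
        for left in range(0, w, size):
            patches.append(
                [image[r][c] for r in range(top, top + size) for c in range(left, left + size)]
            )
    return patches


print("bidirectional:", attention_mask(3, causal=False))  # BERT-style
print("causal:", attention_mask(3, causal=True))          # GPT-style

image = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4x4 "image"
print(split_into_patches(image, 2))  # four 2x2 patches -> four tokens
```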

AI systems can combine different transformer models depending on the task at hand. However, these models have high memory requirements, which can make them too expensive for small and medium-sized businesses. In addition, many models are not fully transparent: it may be impossible to understand why they reach certain conclusions. The performance of each LLM also depends heavily on the quality of the data used during training. This is why many businesses outsource the task of building custom LLMs to reputable providers.
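One reason for the high memory requirements is that self-attention compares every token with every other token, so the attention-score matrix grows with the square of the sequence length. The rough calculation below assumes a straightforward implementation that stores the full score matrix for every head, with hypothetical settings of 32 heads and 2-byte (16-bit) values; it counts only the scores of a single layer, not model weights or other activations, and memory-efficient attention kernels can avoid storing the full matrix.

```python
def attention_score_bytes(seq_len: int, heads: int, bytes_per_value: int = 2) -> int:
    """Bytes for one layer's full attention-score matrices (seq_len x seq_len per head)."""
    return seq_len * seq_len * heads * bytes_per_value


for n in (1024, 2048, 4096):
    gib = attention_score_bytes(n, heads=32) / 2**30
    print(f"{n} tokens: {gib:.2f} GiB per layer")
```

Doubling the input length quadruples this cost, which is why long documents quickly become expensive to process.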

Final Thoughts

Chatbots, AI-driven virtual assistants, content generation services, and modern search engines have become more efficient thanks to transformer models. These tools provide recommendations regarding employees' personal growth, handle complex issues, and generate industry-relevant outputs. They can recognize sentiment and analyze a client's tone to understand the urgency of a request and propose the most satisfying solution. HR and IT professionals use AI chatbots based on TMs to automate tasks, optimize workflows, and scale their operations.

Custom LLMs developed by MetaDialog allow companies to handle an increased volume of requests without expanding their staff and to increase CS team productivity fivefold. Our system can help a company reduce average resolution time and increase customer satisfaction. Get in touch with our team and integrate advanced LLMs into your workflow.