Enterprises increasingly rely on large language models (LLMs) to automate tasks, research and organize data, serve customers, and optimize routines. However, even the most powerful models are built to generate human-sounding responses, not to retrieve data from other sites and systems. In this guide, we answer a popular question: "What is Model Context Protocol (MCP)?" We also explore how it enables LLMs to communicate with third-party platforms.

What Is Model Context Protocol?

MCP is an open standard, introduced by Anthropic, that simplifies building context-aware apps. Instead of writing a custom integration between each LLM and each tool, developers connect them through one common protocol that builds on the function-calling capabilities models already have. Although it was designed to streamline AI app development, its uses go beyond solving the problem of isolated interfaces.

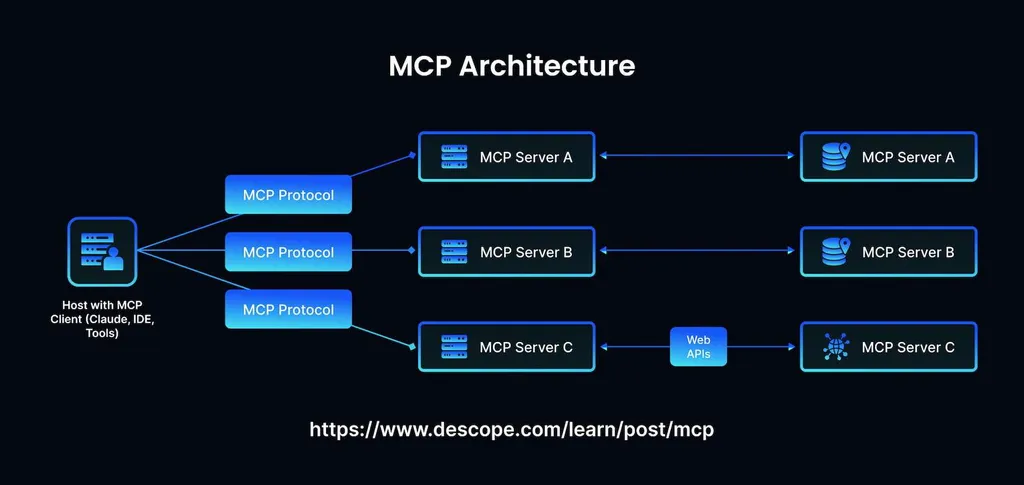

MCP uses a client-server architecture with three main elements:

- Host. The AI application the user interacts with, such as Claude Desktop or an AI-powered IDE. When it receives a query, it uses the protocol to gather the context it needs, runs the orchestration logic, and initiates connections to external data sources such as databases.

- Client. It serves as an intermediary between the host and a server and translates requests into the protocol's message format (JSON-RPC 2.0). Several clients may coexist within the same host, but each one maintains its own dedicated connection to a single server. Clients manage the session lifecycle: they reconnect, handle errors, validate responses, and deal with closed sessions.

- Server. An external service that turns incoming requests into concrete actions and provides the LLM with relevant context. Slack and GitHub are common examples of existing integrations. Many servers are published as open-source GitHub repositories, are written in different programming languages, and make tools easier to use.
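To make the division of roles concrete, here is a minimal Python sketch that models them in memory: a `Host` owns several `Client` objects, and each client talks to exactly one server using JSON-RPC 2.0 envelopes, the message format MCP builds on. The class names, the in-process "transport", and the `echo_server` stand-in are hypothetical illustrations; real clients exchange messages over the stdio or HTTP-based transports defined by the specification.

```python
import json
from typing import Any, Callable, Dict, Optional

# Hypothetical in-process stand-in for a server: takes a JSON-RPC request
# dict and returns a JSON-RPC response dict.
ServerFn = Callable[[dict], dict]


def echo_server(request: dict) -> dict:
    """Toy server that only understands MCP's 'ping' method."""
    if request["method"] == "ping":
        return {"jsonrpc": "2.0", "id": request["id"], "result": {}}
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "error": {"code": -32601, "message": "Method not found"},
    }


class Client:
    """Owns exactly one connection to exactly one server."""

    def __init__(self, server: ServerFn) -> None:
        self._server = server
        self._next_id = 1

    def request(self, method: str, params: Optional[dict] = None) -> Any:
        # Wrap the call in a JSON-RPC 2.0 envelope with a fresh id.
        message: Dict[str, Any] = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            message["params"] = params
        self._next_id += 1
        # Serialize and parse to mimic sending the message over a wire.
        wire_in = json.loads(json.dumps(message))
        response = json.loads(json.dumps(self._server(wire_in)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]


class Host:
    """The user-facing app: it creates one client per connected server."""

    def __init__(self) -> None:
        self.clients: Dict[str, Client] = {}

    def connect(self, name: str, server: ServerFn) -> Client:
        self.clients[name] = Client(server)
        return self.clients[name]
```

For example, `Host().connect("github", echo_server).request("ping")` returns an empty result, while an unsupported method raises an error carrying the server's "Method not found" message. Keeping one client per server means a failure in one connection does not affect the others.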

This structure makes MCP versatile in practice. The protocol simplifies AI workflows and application development and helps teams build a resilient app infrastructure. Since its release, MCP has gained additional capabilities through several specification updates. Some widely used MCP marketplaces already list thousands of servers, and the number keeps growing. The ecosystem includes:

- Claude. Anthropic's own apps, which support the protocol natively.

- Anthropic Console.

- Cursor, an AI-based IDE with built-in MCP support.

- Windsurf, the AI-based IDE created by Codeium.

The ecosystem also includes widely used IDEs from Apple, JetBrains, Microsoft, and other vendors. Their support has accelerated the adoption of the technology among enterprises.

Benefits of MCP

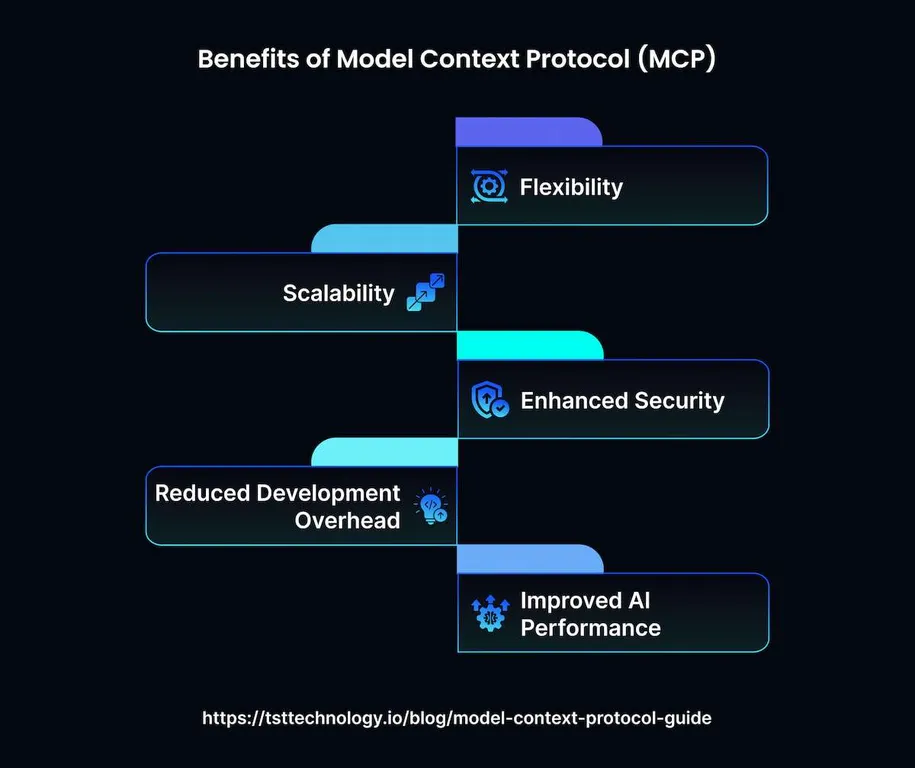

Many businesses recognize the benefits of AI-based solutions that schedule meetings, provide detailed updates on inventory levels, and summarize conversations. However, enterprises typically rely on different service providers, each with its own APIs and output formats. When one of these tools is updated, the change can significantly disrupt AI workflows.

Without a shared standard, engineers have to connect each tool manually, debug every integration, and manage API keys and permissions for authentication. These custom bridges can break whenever a tool's features change. MCP instead gives developers a standard layer between models and programs, which converts tool outputs into a format the LLM can reliably read.
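A minimal sketch of what such a layer does with tool outputs: whatever a vendor returns, the adapter wraps it in one uniform result shape modeled on MCP's tool-call result (a list of `content` blocks plus an `isError` flag). The function name and the sample vendor outputs are hypothetical; real MCP servers build this structure themselves, usually through an SDK.

```python
import json
from typing import Any, Dict


def to_tool_result(raw: Any, is_error: bool = False) -> Dict[str, Any]:
    """Normalize an arbitrary tool output into one MCP-style result shape."""
    if isinstance(raw, bytes):
        # e.g. a legacy system that returns raw CSV bytes
        text = raw.decode("utf-8", errors="replace")
    elif isinstance(raw, str):
        text = raw
    else:
        # Dicts, lists, numbers: serialize deterministically as JSON text.
        text = json.dumps(raw, sort_keys=True)
    return {"content": [{"type": "text", "text": text}], "isError": is_error}
```

Because the model only ever sees this one shape, a vendor that changes its response format requires an update to its adapter, not to every AI workflow that uses it.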

Here are some other advantages of this specification:

- Streamlined multi-agent orchestration;

- Improved retrieval augmented generation (RAG);

- No need for direct API integrations.

MCP is expected to find many more applications in the future. It simplifies using LLM features within a single infrastructure and enables AI agents to operate more independently and adapt to changing real-world conditions. Companies use the standard to simplify convoluted agentic workflows and minimize human intervention, which frees teams to focus on complex tasks that require a creative approach.

Much of MCP's potential lies in how easily it links AI assistants with data repositories. This lets LLMs generate more accurate outputs that are relevant to a specific situation.

How to Implement MCP

Companies were quick to recognize the potential applications of the new protocol. However, adopting it requires solid technical skills. To switch to the protocol, build connectors, and test them thoroughly, teams typically follow these steps:

- Install existing pre-built servers in a host such as the Claude Desktop app.

- Build a custom MCP server.

- Contribute to open-source repositories of connectors.
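For a sense of what building a custom server involves, here is a minimal, standard-library-only Python sketch of a server speaking newline-delimited JSON-RPC over stdio. It answers `initialize`, `tools/list`, and `tools/call` for one tool. The `get_stock_level` tool, its demo data, and the helper names are hypothetical, and the version handling is deliberately simplified; treat it as an illustration of the message flow, not a spec-complete implementation.

```python
import io
import json
from typing import Any, Dict, List, Optional, TextIO

# Hypothetical demo tool and data; a real server would call an internal API.
STOCK = {"A1": 5, "B2": 0}
TOOLS = [{
    "name": "get_stock_level",
    "description": "Return the stock level for a SKU (demo data).",
    "inputSchema": {
        "type": "object",
        "properties": {"sku": {"type": "string"}},
        "required": ["sku"],
    },
}]


def _reply(msg_id: Any, result: Dict[str, Any]) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def _error(msg_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(msg: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Answer one JSON-RPC message; notifications (no 'id') get no reply."""
    if "id" not in msg:
        return None
    method, params = msg.get("method"), msg.get("params", {})
    if method == "initialize":
        # Simplification: echo the client's version instead of negotiating.
        return _reply(msg["id"], {
            "protocolVersion": params.get("protocolVersion"),
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "inventory-demo", "version": "0.1.0"},
        })
    if method == "tools/list":
        return _reply(msg["id"], {"tools": TOOLS})
    if method == "tools/call":
        if params.get("name") != "get_stock_level":
            return _error(msg["id"], -32602, "Unknown tool")
        sku = params.get("arguments", {}).get("sku", "")
        if sku not in STOCK:
            # Tool-level failures are reported inside the result.
            text = f"Unknown SKU {sku}"
            return _reply(msg["id"], {"content": [{"type": "text", "text": text}], "isError": True})
        text = f"{sku}: {STOCK[sku]} in stock"
        return _reply(msg["id"], {"content": [{"type": "text", "text": text}], "isError": False})
    return _error(msg["id"], -32601, "Method not found")


def serve(stdin: TextIO, stdout: TextIO) -> None:
    """stdio transport: one JSON message per line in, one per line out."""
    for line in stdin:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            stdout.write(json.dumps(reply) + "\n")
            stdout.flush()


def run_lines(messages: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Feed messages through serve() in memory, e.g. for quick tests."""
    out = io.StringIO()
    serve(io.StringIO("".join(json.dumps(m) + "\n" for m in messages)), out)
    return [json.loads(line) for line in out.getvalue().splitlines()]
```

To run it as a local server, a small launcher would call `serve(sys.stdin, sys.stdout)`; a host such as Claude Desktop can then start it as a subprocess listed under `mcpServers` in its `claude_desktop_config.json` file. For production work, the official MCP SDKs take care of version negotiation, schemas, and transports.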

Because implementing the new approach can be daunting, many businesses outsource this task to experienced service providers. This lets them achieve high-quality results without spending significant internal resources.

As more companies implement AI assistants, they will need to invest more in LLM capacity to improve the models' reasoning capabilities. Partnering with trusted providers that specialize in LLM development and know how to implement new interface standards helps them avoid data silos and the common issues that arise when bots are integrated into legacy systems.

MCP has already become a popular open standard for establishing secure connections between AI systems and data sources. While it can be challenging to implement, it significantly streamlines access to isolated data stored on third-party platforms.

Common Challenges Solved by the New Protocol

When enterprises started to adopt AI technology, they discovered some limitations of LLMs. One of the main issues is that these systems are isolated from the platforms that hold current data.

LLMs are trained on high-quality datasets, but retraining them frequently is difficult. As a result, end users may get outdated or irrelevant answers. They have to collect information on their own and paste it into the chat window to get a useful reply. While several models already support AI-driven web search, they still cannot access many knowledge bases and utilities. This problem affects even popular solutions.

Many enterprises also face the "NxM problem": with N models and M tools, every model-tool pair needs its own integration. Each AI model provider relies on its own mechanisms to communicate with all sorts of apps, which complicates interoperability.

The problem leads to such notable issues:

- Complicated workflows. Developers have to solve the same tasks for each model or endpoint and spend a lot of time writing code from scratch. With every component they integrate, they start anew and write more code to keep everything working.

- Maintenance issues. Whenever modules and APIs are upgraded, companies must spend additional resources to keep them working within their framework. Without a standardized approach, the product may break.

- Fragmentation. Developers struggle with inconsistencies caused by integrations that each work differently.

MCP can potentially solve the NxM problem by offering a standard way to connect AI applications to databases and tools, which also makes their replies more relevant. Letting LLMs call APIs through a single protocol helps developers streamline routines and achieve higher consistency.
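The arithmetic behind this is simple. Without a shared protocol, every model-tool pair needs its own connector, so the count grows as N × M. With MCP, each AI application implements a client once and each tool ships one server, so the count grows as N + M. The short Python sketch below compares the two; the function names and the sample sizes (5 models, 20 tools) are arbitrary illustrations.

```python
def integrations_without_standard(models: int, tools: int) -> int:
    # Every model-tool pair needs its own custom connector.
    return models * tools


def implementations_with_mcp(models: int, tools: int) -> int:
    # Each AI app implements an MCP client once; each tool ships
    # one MCP server that any client can use.
    return models + tools


for models, tools in [(5, 20), (5, 21)]:
    print(models, tools,
          integrations_without_standard(models, tools),
          implementations_with_mcp(models, tools))
```

With 5 models and 20 tools, that is 100 custom connectors versus 25 protocol implementations. Adding a 21st tool costs 5 new connectors without a standard, but only one new MCP server with it.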

How MCP Functions

When a user sends a query to an AI application that supports MCP, the system may need to communicate with third-party data sources to answer it. For Claude to use resources available outside the chat, the host first goes through a setup sequence:

- Establishing a connection. When Claude Desktop or a similar host starts, it connects to the MCP servers configured on the user's device.

- Discovering capabilities. Each client asks its server what it offers and obtains the list of tools, prompts, and resources that server provides.

- Registration. The client registers the discovered capabilities so the AI assistant can use them when communicating with the user.
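The setup sequence can be sketched in Python as follows. The client sends `initialize`, confirms with the `notifications/initialized` notification, then calls `tools/list`, `resources/list`, and `prompts/list` only for the capability groups the server declared, and records each item in a registry. The `send`/`notify` callables, the registry key format, and the client name are hypothetical; a real client would also handle pagination and protocol version negotiation.

```python
from typing import Any, Callable, Dict

# Hypothetical transport hooks: send() performs a request and returns its
# result; notify() sends a notification that expects no reply.
Send = Callable[[str, dict], dict]
Notify = Callable[[str], None]


def discover(server_name: str, send: Send, notify: Notify,
             registry: Dict[str, Dict[str, Any]]) -> None:
    # 1. Establish the session.
    init = send("initialize", {
        "protocolVersion": "2025-06-18",
        "capabilities": {},
        "clientInfo": {"name": "demo-host", "version": "0.1.0"},
    })
    notify("notifications/initialized")
    # 2. Discover only the capability groups the server declared.
    declared = init.get("capabilities", {})
    for kind in ("tools", "resources", "prompts"):
        if kind not in declared:
            continue
        for item in send(f"{kind}/list", {})[kind]:
            # 3. Register each item so the assistant can use it later.
            key = item.get("name") or item.get("uri")
            registry[f"{server_name}:{kind}:{key}"] = item
```

Keying the registry by server name keeps identically named tools from different servers apart, which matters once a host connects to many servers.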

Once a user sends a request, the AI system follows several steps to produce a relevant reply. Here are the main stages:

- Recognizing the request. The model analyzes the question and determines that its training data cannot answer it and that it needs real-time data stored on other platforms.

- Choosing the most suitable capabilities. The model decides which MCP capability, such as a specific tool, to use to answer the query.

- Requesting permission. The client asks the user whether they agree to let the assistant use the necessary tools. This step is essential, as it keeps systems compliant and ensures data is handled only with the user's explicit permission.

- Exchanging data. When the user agrees, the client sends a request to the appropriate server in the standard's message format (JSON-RPC 2.0).

- Processing a request. The MCP server handles the request and performs the necessary actions. It may contact a platform with weather reports, analyze information in a file, or search a database.

- Returning results. The server sends the collected information to the client.

- Integrating relevant context. The chatbot analyzes the gathered data and provides a reply to the user.
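The request-handling stages map onto a few lines of code. In this minimal Python sketch, nothing is sent to the server unless the user consents; the approved call goes out as a `tools/call` request with the tool `name` and `arguments`, and the text blocks of the result are returned for the model to use as context. The function name, the `ask_user`/`send` callables, and the weather tool in the usage example are hypothetical.

```python
from typing import Any, Callable, Dict


def call_tool_with_consent(
    tool: str,
    arguments: Dict[str, Any],
    ask_user: Callable[[str], bool],
    send: Callable[[str, dict], dict],
) -> str:
    # Requesting permission: nothing leaves the client without consent.
    if not ask_user(f"Allow the assistant to run '{tool}' with {arguments}?"):
        return "The user declined; answer without external data."
    # Exchanging data: a tools/call request in the standard message format.
    result = send("tools/call", {"name": tool, "arguments": arguments})
    # Returning results: keep the text content blocks.
    texts = [block["text"] for block in result.get("content", [])
             if block.get("type") == "text"]
    if result.get("isError"):
        return "Tool error: " + " ".join(texts)
    # Integrating context: this string would be added to the model's context.
    return "\n".join(texts)
```

For example, with a server stub that answers a hypothetical `get_forecast` tool and an `ask_user` callback that returns `True`, the function returns the forecast text; if the callback returns `False`, no request is sent at all.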

Despite the number of stages, the whole process typically completes within a few seconds.

New Developments and Potential Improvements

The protocol has already changed how LLM applications connect to external data and tools. It has simplified data retrieval and introduced a convenient, standard format for integrations. It helps large companies and individual developers build viable systems and add components without rewriting code, which saves time and lets employees focus on priority tasks.

Experts have emphasized the importance of the following developments that can further improve MCP:

- Safe elicitation. Many people are concerned about software accessing sensitive data when they handle financial transactions, verify IDs, or share other private information. A new URL mode lets servers direct users to a web page to complete such interactions, so the sensitive data does not pass through the MCP client.

- Progressive scoping. Some users raised concerns about the risks of how authorization scopes are requested. They recommended adding an MCP-specific attribute, a scopes_default parameter, to the Protected Resource Metadata (PRM).

- Client ID Metadata Documents (CIMD). Client metadata will be published at specific URLs, and OAuth clients will use these URLs as their identifiers. This allows servers to work with clients even without a pre-established relationship and is expected to improve authorization procedures. Clients will no longer have to rely solely on pre-registration or Dynamic Client Registration (DCR).
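To illustrate the CIMD idea, here is a minimal Python sketch of the checks an authorization server might run after fetching a client's metadata document. It assumes rules along the lines of the IETF draft on OAuth Client ID Metadata Documents as we understand it: the client ID is an HTTPS URL with a path, the document must state that same URL as its `client_id`, and the requested redirect URI must be one the document declares. The function name and the example URLs are hypothetical, and the sketch skips fetching, caching, and other security checks a real server needs.

```python
from typing import Any, Dict
from urllib.parse import urlsplit


def validate_cimd(client_id: str, document: Dict[str, Any], redirect_uri: str) -> bool:
    """Check an already-fetched metadata document against its client ID URL."""
    parts = urlsplit(client_id)
    # Assumption: the identifier must be an HTTPS URL pointing at a document.
    if parts.scheme != "https" or not parts.netloc or parts.path in ("", "/"):
        return False
    # Assumption: the document must claim the same URL it was fetched from.
    if document.get("client_id") != client_id:
        return False
    # Only redirect URIs declared by the client are acceptable.
    return redirect_uri in document.get("redirect_uris", [])
```

The self-reference check is what makes the approach work without pre-registration: whoever controls the URL controls the client's identity, so the server can trust the metadata without having seen the client before.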

These changes are predicted to expedite the adoption of the innovative approach and make products more reliable.

Final Thoughts

MCP links LLMs with apps and systems. It reduces fragmentation, makes app behavior more consistent, and may resolve many of the challenges described above. It gives apps an efficient way to communicate with all sorts of tools and collect valuable information updated in real time, which makes chatbot outputs more reliable. App developers use MCP to reduce their workload, increase the interoperability of their systems, and avoid siloed data.

Now that you know the answer to the question "What is Model Context Protocol?", it's time to implement it to simplify your LLM integrations and switch to a popular format for linking their components. The assistance of experienced providers like MetaDialog allows enterprises to build custom LLMs and integrate them quickly with existing systems. Get in touch with our team to discover how you can optimize your processes by deploying advanced LLMs that simplify convoluted processes and automate operations to save resources.